Linear shift registers with feedback. Feedback shift registers Linear feedback shift register

Read also

Shift register sequences are used in both cryptography and coding theory. Their theory is well developed; shift register-based stream ciphers were the workhorse of military cryptography long before the advent of electronics.

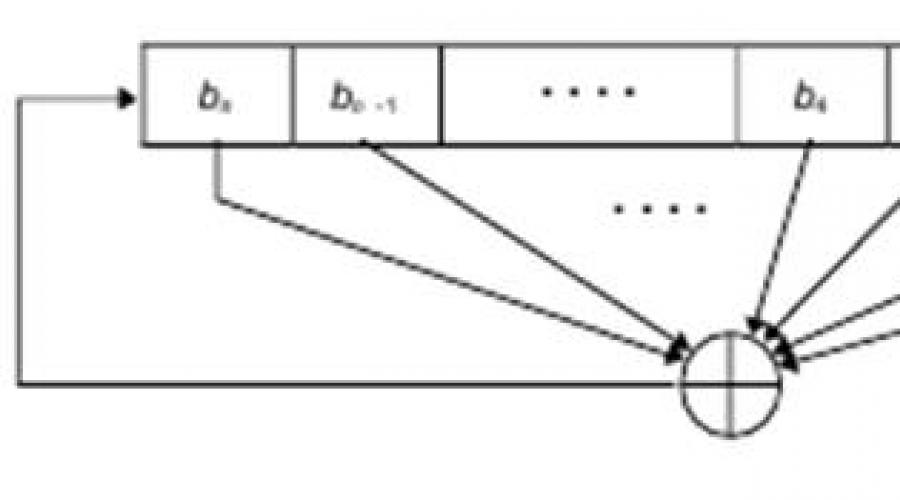

Shift register with feedback(hereinafter referred to as RgCsOS) consists of two parts: a shift register and a feedback function. A shift register is a sequence of bits. The number of bits is determined shift register length, if the length is n bits, then the register is called n-bit shift register. Whenever a bit needs to be retrieved, all the bits in the shift register are shifted to the right by 1 position. The new leftmost bit is a function of all the other bits in the register. The output of the shift register is one, usually least significant, bit. Shift register period is the length of the resulting sequence before its repetition begins.

Figure 1. Feedback shift register

Shift registers quickly found use in stream ciphers because they were easily implemented using digital hardware. In 1965, Ernst Selmer, chief cryptographer of the Norwegian government, developed the theory of the shift register sequence. Solomon Golomb, an NSA mathematician, wrote a book presenting some of his and Selmer's results. The simplest type of feedback shift register is a linear feedback shift register (LFSR). The feedback of such registers is simply an XOR (modulo two addition) of some register bits, a list of these bits called a tap sequence. Sometimes such a register is called a Fibbonacci configuration. Because of the simplicity of the feedback sequence, fairly advanced mathematical theory can be used to analyze PrCsVOC. By analyzing the resulting output sequences, you can verify that the sequences are random enough to be safe. RGCCLOS is used more often than other shift registers in cryptography.

Figure 2. PrCsLOS Fibbonacci

In general, an n-bit PrCsLOS can be in one of N=2 n -1 internal states. This means that theoretically such a register can generate a pseudo-random sequence with a period of T=2 n -1 bits. (The number of internal states and the period are equal to N=T max =2 n -1, because filling PrCsLOS with zeros will cause the shift register to produce an infinite sequence of zeros, which is absolutely useless). Only for certain branch sequences PrCsLOS will cyclically pass through all 2 n -1 internal states, such PrCsLOS are RgSsLOS with maximum period. The resulting result is called M-sequence.

Example . The figure below shows a 4-bit PrCCLOS with the first and fourth bits tapped. If it is initialized with the value 1111, then before repeating the register will take the following internal states:

Shift clock number (internal state) |

Register status |

Output bit |

|||

|

Initial value |

|||||

|

15 (return to initial state) |

|||||

|

16 (repeat states) |

The output sequence will be a string of least significant bits: 1 1 1 1 0 1 0 1 1 0 0 1 0 0 0 with a period T=15, the total number of possible internal states (except zero), N=2 4 -1=16-1= 15=T max, therefore the output sequence is an M-sequence.

In order for a particular PrCsLOS to have a maximum period, the polynomial formed from the tap sequence and constant 1 must be primitive modulo 2. The polynomial is represented as a sum of powers, for example, a polynomial of degree n is represented as follows:

a n x n +a n-1 x n-1 + … +a 1 x 1 +a 0 x 0 =a n x n +a n-1 x n-1 + … +a 1 x+a 0 , where a i =(0,1) for i=1…n, a x i - indicates the digit.

The degree of the polynomial is the length of the shift register. A primitive polynomial of degree n is an irreducible polynomial that is a divisor of x 2n?1 +1, but is not a divisor of x d +1 for all d that are divisors of 2 n -1. The corresponding mathematical theory can be found in.

In general there is no simple way generate primitive polynomials of a given degree modulo 2. The easiest way is to select a polynomial at random and check whether it is primitive. This is not easy and is a bit like checking to see if a randomly selected number is prime - but many math software packages can solve this problem.

Some, but certainly not all, polynomials of various degrees that are primitive modulo 2 are given below. For example, record

(32, 7, 5, 3, 2, 1, 0) means that the following polynomial is primitive modulo 2: x 32 + x 7 +x 5 + x 3 + x 2 + x + 1.

This can be easily generalized to PrCsVOC with a maximum period. The first number is the length of PrCsLOS. The last number is always 0 and can be omitted. All numbers except 0 specify a tap sequence, counted from the left edge of the shift register. That is, terms of a polynomial with lower degrees correspond to positions closer to the right edge of the register.

Continuing with the example, writing (32, 7, 5, 3, 2, 1, 0) means that for a given 32-bit shift register, a new bit is generated by XORing the thirty-second, seventh, fifth, third, second, and first bits , the resulting PrCsLOS will have a maximum length, cycling through 2 32 -1 values before repeating.

Figure 4. 32-bit PrCcLOS with maximum length

Let's consider the program code PrCsLOS, in which the tap sequence is characterized by a polynomial (32, 7, 5, 3, 2, 1, 0). In C it looks like this:

static unsigned long ShiftRegister = 1;

/* Everything except 0. */

ShiftRegister = ((((ShiftRegister >> 31)

^(ShiftRegister >> 6)

^(ShiftRegister >> 4)

^(ShiftRegister >> 2)

^(ShiftRegister >> 1)

^ShiftRegister))

| (ShiftRegister >> 1);

return ShiftRegister & 0x00000001;)

If the shift register is longer than a computer word, the code becomes more complicated, but not much. Appendix B contains a table of some primitive polynomials modulo 2; we will use it in the future to identify some properties of these polynomials, as well as in software implementation to specify the tap sequence.

Please note that all elements of the table have an odd number of coefficients. This long table is given for further work with RgCsLOS, since RgCsLOC is often used for cryptography with stream ciphers and in pseudorandom number generators. In our case, we can use polynomials with a highest degree of no more than seven.

If p(x) is primitive, then x n p(1/x) is primitive, so each element of the table actually defines two primitive polynomials. For example, if (a, b, 0) is primitive, then (a, a-b, 0) is primitive as well. If (a, b, c, d, 0) is primitive, then (a, a-d, a-c, a-b, 0) is also primitive. Mathematically:

if x a +x b +1 is primitive, then x a +x a-b +1 is primitive,

if x a +x b +x c +x d +1 is primitive, then x a +x a-d +x a-c +x a-b +1 is primitive.

Primitive trinomials are the fastest to implement in software, since to generate a new bit you need to XOR only two bits of the shift register (the zero term is not taken into account, i.e. x 0 = 1, see the example above). Indeed, all feedback polynomials given in the table are sparse, that is, they have few coefficients. Sparsity is always a source of weakness, which is sometimes enough to break the algorithm. For cryptographic algorithms, it is much better to use dense primitive polynomials, those with many coefficients. By using dense polynomials, especially as part of a key, much shorter PrCsLOS can be used.

Generating dense primitive polynomials modulo 2 is not easy. In general, to generate primitive polynomials of degree k, you need to know the factorization of the number 2 k -1. By themselves, PrCsLOS are good generators of pseudo-random sequences, but they have some undesirable non-random (deterministic) properties. Consecutive bits are linear, which makes them useless for encryption. For a PrCcLOS of length n, the internal state is the previous n output bits of the generator. Even if the feedback circuit is kept secret, it can be determined from the 2n output bits of the oscillator using high efficient algorithm

In addition, large random numbers, generated using consecutive bits of this sequence, are highly correlated and for some types of applications are not random at all. Despite this, RgCCLOS are often used to create encryption algorithms as components of systems and encryption algorithms.

- “Tetromino tennis”). He created and solved countless math puzzles and puns. About 20 years ago I learned that he was very close to discovering my favorite rule of 30 for cellular automata back in 1959, when I was just born.

How I Met Saul Golomb

Almost all the scientists and mathematicians I know came to know me through my professional connections. But not Sola Golomba. It was 1981, and I, a 21-year-old physicist (who had become a bit famous in the media for being the youngest recipient of the MacArthur Prize at the inaugural awards ceremony), was doing research at . There was a knock on the door of my office, and soon a young woman crossed the threshold. This was already unusual, because in those days, where theoretical high-energy physics was being studied, there were hopelessly few women. Although I had lived in California for several years, I had never left the campus and was ill-prepared for the burst of Southern California energy that burst into my office. The woman introduced herself as Astrid and said that she had attended Oxford and knew someone I had been with kindergarten. She explained that she had been tasked with gathering information about interesting people in the Pasadena area. I think she considered me a difficult case, but nevertheless insisted on talking. And one day when I was trying to say something about what I do, she said: " You must meet my father. He's already old man but his mind is still razor sharp "And it so happened that Astrid Golomb, Sol Golomb's eldest daughter, introduced me to him.The Golomb family lived in a house located in the mountains near Pasadena. They had two daughters: Astrid - a little older than me, an ambitious Hollywood girl, and Beatrice - about my age and scientific mind. The Golomb sisters often hosted parties, usually at their home. The themes were different: a party in the garden and croquet with flamingos and hedgehogs (" The winner will be the one whose costume best matches the stated theme"), or a Stonehenge-style party with instructions written in runes. At these parties, young and not so young people crossed paths, including various local luminaries. And at them, a little to the side, there was always a small man with a big beard, a little like looking like an elf and always wearing a dark coat - Solomon Golomb himself.

Gradually I learned a little about him. What he was involved in" information theory". That he worked at the University of Southern California. That he had various vague but apparently high-level government and other connections. I had heard about shift registers, but knew virtually nothing about them.

Here's what happens after a while:

As you can see, the shift register always shifts bits to the left and other bits are added to the right according to a simple rule. The sequence of bits appears to be random, although as shown in the figure, it eventually repeats itself. Sol Golomb found an elegant mathematical way to analyze such sequences and how they repeat.

If the shift register has size n, then he has 2 n possible states (corresponding to all possible sequences of 0 and 1 with a length n). Because the rules for a shift register are deterministic, any given position must always converge on another similar position. This means that the maximum possible number of steps that the shift register can go through before the steps start repeating is 2 n(actually 2 n- 1, since a position with all 0s cannot evolve into anything else).

In the example above, the shift register is of size 7 and will repeat in exactly 2 7 - 1 = 127 steps. But which shift registers - with which tap arrangements - will produce sequences of the maximum length? Solomon Golomb began to explore this question in the summer of 1954. And his answer was simple and elegant.

The shift register above has branches at positions 7, 6 and 1. Saul represented this algebraically using a polynomial X 7 + X 6 + 1. He then showed that the generated sequence will be of maximum length if this polynomial " irreducible modulo 2"; therefore it cannot be factorized, which makes it the prime number analogue of polynomials; and the presence of some other properties makes it a "primitive polynomial". Today this is easy to check using Mathematica and the Wolfram Language:

Back then, in 1954, Saul had to do it all by hand; he compiled a rather long table of primitive polynomials corresponding to shift registers that produced sequences of maximum length:

History of shift registers

The idea of maintaining random access memory through " delay lines", which transmit digital pulses, dates back to the beginning of the computer era. By the late 1940s, such delay lines were applied digitally using a series of vacuum tubes and were called " shift registers"It is not yet clear when the first feedback shift registers were created. It may have been in the late 1940s. However, this event is still shrouded in mystery because they were first used in military cryptography.The basic idea of cryptography is to change meaningful messages so that they cannot be recognized; however, if you know the key, you can recreate the encrypted message. So-called stream ciphers work on the principle of creating long sequences of seemingly random elements, and are decoded using a receiver that independently generates the same sequence of elements.

Linear feedback shift registers were prized in cryptography due to their long repeat periods. However, the mathematical analysis Saul used to find these periods makes it clear that such shift registers are not suitable for secure cryptography. However, at the beginning they seemed quite good (especially in comparison with the sequential rotor positions in Enigma); there were persistent rumors that Soviet military cryptosystems were built on this basis.

Back in 2001, when I was working on historical notes for my book A new kind of science, Saul and I had a long conversation on the phone about case shifts. Saul told me that when he started, he knew nothing about shift register cryptographic work. He said that people at Bell Labs, Lincoln Labs, and the Jet Propulsion Laboratory began working on shift registers around the same time as him; however, he managed to go a little further, which he noted in his 1955 report.

Over the following years, Saul gradually learned about various predecessors to his work from the literature on pure mathematics. As early as 1202, Fibonacci was talking about what are now called Fibonacci numbers, which are generated by a recurrence relation (which can be thought of as analogous to a linear feedback shift register, operating on arbitrary integers rather than ones and zeros). There is also small work from the early 1900s on the cyclicity of 0 and 1, but the first large-scale study was the work of Oystein Åre at the University of Oslo. Ore had a student named Marshall Hall who advised the predecessor of the National Security Agency in the late 1940s. - probably on the topic of shift registers. However, everything he did was classified, and so he arranged for Saul to publish the history of linear feedback shift registers; Saul dedicated his book to Marshall Hall.

What are the sequences generated by shift registers for?

I have noticed many times that systems defined by simple rules end up with many possible applications; Shift registers also follow this pattern. Both modern hardware and software are crammed with shift registers: a typical mobile phone probably has a dozen or two of them, usually implemented in hardware, and sometimes in software(when I write "shift register" here, I mean linear feedback shift register - LFSR).In most cases, those shift registers that produce sequences of the maximum length (otherwise known as " M-sequences"). The reasons why they are used generally have to do with some of their properties that Saul discovered. They always contain the same number of 0s and 1s (though in fact there is always exactly one extra 1). Saul subsequently showed that they it is also common to have the same number of sequences 00, 01, 10 and 11 - and for large blocks too. This property is ". balance"This in itself is already very useful - for example, if you test all possible combinations of bits as input.

However, Saul discovered another, even more important property. Replace every 0 in the sequence with a 1, then multiply each element in the shifted version of the sequence by the corresponding element in the original. Saul showed that when added, these elements will always be equal to zero (except in cases where there is no shift at all). That is, the sequence has no correlation with the shifted versions of itself.

These properties will be true for any sufficiently long random sequence of 0s and 1s. Surprisingly, for sequences of maximum length, these properties are always true. The sequences have some chaotic features, but in fact they are not chaotic at all: they have a well-defined, organized structure.

It is this structure inherent in linear feedback shift registers that makes them unsuitable for serious cryptography. But they are great for basic "element permutation" and low-cost cryptography, and are widely used for these purposes. Often the task is simply to “whiten” the signal (as in “white noise”). Sometimes you need to transmit data with long sequences of 0s. But in this case, the electronics can get confused if the “silence” is too long. You can avoid this problem by scrambling the original data by combining it with the sequence generated by the shift register. This is exactly what has been done with Wi-Fi, Bluetooth, USB, digital TV, internet, etc.

A side effect of shift register scrambling is more complex decoding, which is sometimes used to improve security (DVD technology uses a combination of 16-bit and 24-bit shift registers to encode data; many GSM phones use a combination of three shift registers to encode all signals).

Saul created the mathematical basis for all this, and also introduced a number of key figures to each other. As early as 1959, he met Irwin Jacobs, who had recently received his doctorate from MIT. He also knew Andy Viterbi, who worked at the Jet Propulsion Laboratory. Saul introduced them and in 1968 they founded a company called Linkabit, working on coding systems (mainly for military purposes).

In 1985, Jacobs and Viterbi founded another company called Qualcomm. They weren't doing particularly well at first, but by the early 1990s, when they began producing components to deploy CDMA in cell phones, the company began to grow rapidly.

So where are these registers?

It's surprising that most people have never heard of shift registers and yet interact with them every time they use modern systems communications, computer technology etc. It’s easy to get confused here, given that behind the different names and abbreviations are the same sequences of linear feedback shift registers (PN, pseudo noise, M-, FSR, LFSR sequences, MLS, SRS, PRBS, etc. .).When it comes to mobile phones, their use of shift register-generated sequences has varied over the years, increasing and decreasing. networks are based on TDMA, so they do not use sequences generated by shift registers to encode their data, but they still often use CRC (cyclic redundancy code) to check blocks of data. networks are the largest users of CDMA, so the sequences generated by the shift registers are involved in the transmission of every bit. networks typically use a combination of time and frequency slots that do not include shift register sequences, although CRCs are still used: for example, to interact with integral data when frequency windows overlap. has a more complex structure - with multiple antennas that dynamically adapt to use the optimal time and frequency of slots. However, half of their channels are typically allocated to "pilot signals" which are used to infer the local radio environment; they are also based on sequences generated by shift registers.

In electronics production, we usually try to achieve the most high speed data transmission with minimal energy consumption, allowing bits to overcome the noise threshold. And, as a rule, this path leads to automation of error detection - and therefore to the use of CRC (cyclic redundancy code) and, therefore, sequences generated by the shift register. This applies to almost all types of buses inside the computer (PCIe, SATA, etc.): ensuring interaction between parts of the central processor, or receiving data from devices, or connecting to a display with HDMI. In the case of disks or memory, CRC and other codes based on sequences generated by shift registers are also almost universally used to operate at maximum speed.

Shift registers are so ubiquitous that it is almost impossible to estimate how many bits they generate. There are approximately 10 billion computers, slightly fewer phones, and a huge number of devices in the embedded IoT (“Internet of Things”). Almost every car in the world (and there are more than a billion of them!) has about 10 built-in microprocessors.

At what frequency do shift registers operate? Communication systems have a base carrier frequency in the hertz range, as well as a "chip rate", which tells how quickly multiple accesses occur ( we're talking about o CDMA) in the MHz range. On the other hand, in buses inside computers, all data is transferred using shift registers - with the best transfer speed in the hertz range.

There are at least 10 billion communication lines running for at least 1/10 billion seconds (about 3 years) that use at least 1 billion bits from shift registers every second, which means that to date Saul's algorithm has been used at least an octillion times.

Is this the most commonly used algorithm? I think yes. I suspect that only arithmetic operations can compete. These days, processors are capable of processing a trillion arithmetic operations per second, and such operations are required for almost every bit generated by a computer. But how is arithmetic done? At some level, this is simply the implementation of digital electronics.

However, there are "algorithmic ideas" that remain unclear to everyone except microprocessor designers. When Babbage made his difference engine (see the article on Habré "Unraveling the story of Ada Lovelace (the first programmer in history)"), carries became a big hindrance in performing arithmetic operations (in fact, a linear feedback shift register can be thought of as a system that does something like arithmetic calculations but does not carry over). There are “transfer propagation trees” that optimize the transfer. There are also little tricks (like the "Booth algorithm", "Wallace trees", etc.) that reduce the number of bit operations required to create the "internals" of the arithmetic. But unlike linear feedback shift registers, there is simply no single algorithmic idea that can be used almost anywhere; so I think the longest sequences generated by linear feedback shift registers still win among the most used sequences.

Cellular automata and shift registers with nonlinear feedback

Although it may not seem obvious at first glance, it turns out there is a close connection between feedback shift registers and the cellular automata that I have studied for many years. The basic organization for a feedback shift register is to evaluate one bit at a time. In a cellular automaton, there is one line of cells, and at each step, all cells are updated in parallel, based on a rule that depends, for example, on the values of their nearest neighbors.To see how they are related, you need to run a size feedback shift register n, and at the same time display its state only every n steps; in other words, let all the bits be rewritten before they appear again. If each step of a linear feedback shift register is mapped (here with two taps), then at each step everything will shift to the left - that's it. But if you make a compressed picture, showing only every n steps, a pattern will become visible.

This is a nested pattern; and this picture is very similar to the one that can be obtained if a cellular automaton takes a cell and its neighbor and adds them modulo 2 (XOR). This is what happens to a cellular automaton if it arranges its cells so that they are in a circle the same size as the shift register above:

At first, cellular automata and shift register patterns turn out to be exactly the same. If you look at these pictures, it becomes less surprising that the mathematics of shift registers should have something to do with cellular automata. And given the repeatability of nested patterns, it's clear why there must be an elegant mathematical theory for shift registers.

Typical shift registers used in practice do not have such clearly repeating patterns. Here are some examples of shift registers that generate sequences of maximum length. The fact is that the branches are far apart, making it difficult to find visual traces of nesting.

How broad is the correspondence between shift registers and cellular automata? For cellular automata, the rules for generating new cell values can be anything. In linear feedback shift registers they should always be based on modulo 2 addition (or XOR). This is what the "linear" part of a "linear feedback shift register" means. It is also possible to use any rule to combine values for nonlinear feedback shift registers (NFSRs).

Indeed, when Saul developed his theory for linear feedback shift registers, he started with the nonlinear case. When he arrived at JPL in 1956, he received a laboratory complete with racks for small electronic modules. Saul said the modules (each about the size of a cigarette pack) were built for a Bell Labs project to perform a specific task. logical operation(AND, OR, NOT, ...). The modules can be used together to implement any desired nonlinear feedback shift registers, providing about a million bits per second (Sol told me that someone tried to do the same thing with universal computer, and what took 1 second using hardware modules took 6 weeks of work on a mainframe computer).

When Saul began studying linear feedback shift registers, his first major discovery was their repetition periods. And in the case of nonlinear ones, he devoted most of his efforts to trying to understand the same thing. He collected all kinds of experimental data. He told me that he even tested sequences 2 45 long, which took a year. He made a summary like the picture below (note the visualization of the sequences shown on the waveform line). But he never managed to come up with any general theory, which he had for shift registers with linear feedback.

No wonder he couldn't do it. As early as the 1950s, there were theoretical results (mostly based on Turing's ideas for universal computing) of what programs could in principle do this. I don't think Saul or anyone else ever thought that nonlinear feedback shift registers would use very simple (nonlinear) functions.

And only later it became clear how complex the behavior of even very simple programs. My favorite example is rule 30 for a cellular automaton, in which the values of neighboring cells are combined using a function that can be represented as R + q + r + q*r mod 2(or R XOR ( q OR r)). Incredibly, Saul was considering nonlinear feedback shift registers based on similar functions: R + G + s + q*r + q*s + r*s mod 2. Here below are illustrations of what Saul's function (which can be thought of as "rule 29070"), rule 30, and several other similar rules look like in a shift register:

And here they, not limited by a fixed-size register, look like cellular automata:

Of course, Saul never made pictures like this (and it was almost impossible to do in the 1950s). Instead, he focused on the repetition period as a kind of aggregate characteristic.

Saul wondered whether shift registers with nonlinear feedback could be sources of chaos. From what is currently known about cellular automata, it is clear that they can. For example, to create chaos for Mathematica, we used the 30 cellular automata rule for 25 years (though we recently abandoned it in favor of a more efficient rule that we found after studying trillions of possibilities).

Saul didn't talk much about encryption; although I think he only worked for the government for a short time. He told me that although in 1959 he discovered " multidimensional correlation attacks on nonlinear sequences"at the same time he" carefully avoided claims that the program was for cryptanalysis"The point is that rule 30 for cellular automata (and perhaps also nonlinear feedback shift registers) can be good cryptosystems - although due to the fact that they are seemingly equivalent to linear feedback shift registers (which is not so), they were never used as much as possible.

As an enthusiast, over the past few decades I have tried to study all the predecessors of my work on one-dimensional cellular automata. Two-dimensional cellular automata have been studied a little, but in the case of one-dimensional cellular automata, only one purely theoretical work has been found. I think of all the things I saw, Solomon Golomb's nonlinear feedback shift registers were the closest to what I ended up doing a quarter of a century later.

Polyomino

Hearing the name " Golomb ", many will remember shift registers. However, most will remember polyomino. Saul didn't invent polyominoes, although he did come up with the name. He made systematic what previously appeared only in individual puzzles.The main question Saul was interested in answering was how sets of polyominoes could be organized to cover some area. Sometimes it's quite obvious, and sometimes it's quite difficult. Saul published his first paper on polyominoes in 1954, but it was Martin Gardner who really made polyominoes popular in 1957 (he wrote a column about mathematical games in the magazine Scientific American). As Saul explained in the preface to his 1964 book, the result was " a constant stream of correspondents from all over the world and from all walks of life: chairmen of the boards of directors of leading universities, residents of unknown monasteries, prisoners from famous prisons...".

Game companies also took notice of the new puzzles, and within months there were headlines like " new sensational puzzles", followed by decades of other polyomino-based puzzles and games (no, the creepy bald guy doesn't look like Saul):

Saul continued to publish articles on polyominoes for another 50 years after the first publication. In 1961, he introduced "rep-tiles" that could be used to create fractal ("Infin-tile") patterns. But almost everything Saul did with polyominoes involved solving specific problems.

I have little interest in the specifics of polyominoes; I am interested in the more general phenomena associated with them. It seems that it can be decided with a few simple shapes It’s easy to “pave” the entire plane. But with polyominoes (and all the games and puzzles based on them), it's clear that things aren't that simple. And indeed, in the 1960s it was proven that this problem is theoretically unsolvable.

If we are only interested in a limited area, then, in principle, we can simply list all conceivable arrangements of figures, and then see if they are arranged as they should be. However, if we are interested in infinity, then this need not be done. Maybe someone will find a way to successfully place a million figures, but there is no guarantee that this result can be extended further.

It turns out that it might look like a working Turing machine - or a cellular automaton. You start with a line of tiles. Then the question of whether infinite tiling is possible is equivalent to the question of whether it is possible to set up a Turing machine that will enable it not to stop. The point is that if a Turing machine is universal (that is, it can be programmed to perform any possible computation), then the stopping problem for it may be undecidable, which means that the tiling problem will also be undecidable.

Of course, this depends on the original set of forms. The question is how complex the forms must be to encode universal computations and lead to an unsolvable tiling problem. Solomon Golomb was aware of the literature on this topic, but was not particularly interested in it.

Complex and carefully designed sets of polyominoes are known to actually support universal computation. But what about a simple set? Is it really simple enough to be stumbled upon by accident? If you look at all the systems that I studied, the simplest set really turns out to be simple. However, it is difficult to find.

A much simpler problem is to find polyominoes that successfully fill the planes, although only non-periodically. Roger Penrose found a suitable example in 1994. In my book A new kind of science I gave a slightly simpler example with 3 polyominoes:

The rest of the story

Saul was in his early thirties when he achieved notable success in the field of shift registers and polyominoes... He was a very active person. He wrote several hundred papers, some of which expanded on his earlier work, some of which were answers to questions he had been asked, and some of which were written, it seems, just for fun - to find out interesting things about numbers, sequences, cryptosystems, etc. d.Shift registers and polyominoes are extensive topics (they are even classified in separate categories in the AMS classification). In recent decades, they both received a new round of development when computer experiments began to be carried out on their basis; Sol also took an active part in them. However, many questions remain unanswered. And if large Hadamard matrices can be found for shift registers with linear feedback, then even now little is known about shift registers with nonlinear feedback, not to mention all the non-periodic and other exotic polyominoes.

Saul has always been interested in puzzles, both mathematical and word puzzles. For some time he wrote a puzzle column for Los Angeles Times, and for 32 years wrote " Golomb's Gambits" in the Johns Hopkins alumni magazine. He took part in MegaIQ testing and won a trip to the White House when he and his boss were ranked among the top five in the country.

He invested enormous effort in his work at the university: not only taught undergraduates and supervised graduate students and climbed the administrative ladder (president of the university council, vice-provost for research, etc.), but also expressed his opinions on the management of the university as a whole (e.g. wrote an article entitled “Faculty Consulting: Remove or Leave?”; Answer: no, it’s good for the university!). At the University of Southern California, he was a headhunter, and during his time there, he helped the university rise from obscurity to the top of the rankings of educational programs.

And then there was consulting. Saul was meticulous and did not reveal what he did for government organizations. In the late 1960s, frustrated that everyone but him was selling polyomino games, Saul founded a company he called Recreational Technology, Inc. Things weren't going too well, but Saul met Alvin Berlekamp, a Berkeley professor who was interested in coding theories and puzzles. They subsequently founded the company Cyclotomics (in honor of the cyclotomic polynomials of the form x n– 1), which was eventually sold to Kodak for a tidy sum (Berlekamp also created an algorithmic trading system, which he then sold to Jim Simons and which became the starting point for Renaissance Technologies, one of the largest hedge funds today).

More than 10,000 patents are in one way or another related to Saul’s work, but Saul himself received only one patent on quasi-group based cryptosystems - and I think he has done little to commercialize his work.

For many years Saul worked with the Technion, an Israeli institute of technology. He spoke of himself as " non-religious Orthodox Jew", but at the same time sometimes taught seminars on the Book of Genesis for beginners, and also worked on deciphering parts of the Dead Sea Scrolls (Qumran manuscripts).

Saul and his wife traveled widely, but Saul's "center of the world" was definitely his office in Los Angeles at the University of Southern California, and the home where he and his wife lived for nearly 60 years. He was always surrounded by friends and students. And he had a family. His daughter Astrid played the role of a student in a play about Richard Feynman (she posed for him), and in a friend's novel as one of the characters. Beatrice has dedicated her career to applying a mathematical level of precision to various types of medical conditions and diagnoses (Gulf War-related illnesses, statin effects, hiccups, etc.). I even made a small contribution to Beatrice's life by introducing her to the man who later became her husband - Terry Sejnowski, one of the founders of modern computational neuroscience.

Saul seemed to be involved in a lot of things, even if he didn't talk too much about the details. From time to time I wanted to talk to him about science and mathematics, but he was more interested in telling stories (often very exciting) about both individuals and organizations (" Can you believe that [in 1985], after years of absence from conferences, Claude Shannon simply showed up unannounced at the bar at the annual information theory conference?"; "do you know how much they had to pay the head of Caltech to get him to go to Saudi Arabia?", etc.).

Looking back, I realize that I would have liked to interest Saul in solving some of the mathematical questions raised in my work. I don't think I ever realized the extent to which he loved solving problems proposed by other people. Despite his significant contributions to the infrastructure of the computing world, Saul himself never seriously used computers. He was very proud of the fact that he could easily do calculations in his head. Until the age of 70, he did not use e-mail and never used a computer at home, although mobile phone he had (regular Email almost nothing came from him. I mentioned once that about a year ago I was studying the story of Ada Lovelace; he replied: " The story of Ada Lovelace as Babbage's programmer is so widespread that everyone seems to accept it as fact, yet I have never seen any sources on the subject.").

Saul's daughters threw a party for his 80th birthday a few years ago and created these interesting invitations:

Sol had some health problems, although this did not seem to greatly affect his rhythm of life. His wife's health had been deteriorating, quite sharply in the last few weeks. On Friday, Saul went to his office as usual, and on Saturday night he died in his sleep. His wife Bo survived him by just two weeks and died just two days before their 60th wedding anniversary.

Although Saul is gone, his work lives on in octillions of bits in the digital world.

Goodbye Sol. And from all of us - thank you.

The simplest type of feedback function is a linear function, for example, the modulo 2 sum of the contents of certain bits. This register is called a linear feedback shift register (LFSR). In general, the linear feedback function is given by the formula. Here c k= 1 if k The th bit is used in the feedback function, and c k= 0 otherwise. The symbol Å denotes addition modulo 2 (exclusive OR).

For example, consider an LFSR with a feedback function (see figure).

If the initial state of the register is 1111, then in subsequent tacts it will accept the following series of states: 1111, 0111, 1011, 0101, 1010, 1101, 0110, 0011, 1001, 0100, 0010, 0001, 1000, 1100, 1110, 1111, 1111, 1111 ...

The output sequence is formed from the least significant (rightmost) bit of the register. It will look like this: 1 1 1 1 0 1 0 1 1 0 0 1 0 0 0 1. It can be seen that the generated bit sequence is entirely determined by the initial state of the register and the feedback function. Since the number of possible register states is finite (it is equal to 2 L), then, sooner or later, the key sequence will begin to repeat itself. The maximum length of a non-repeating part of a key sequence is called its period T. The period depends on the feedback function. The maximum possible period is T max = 2 L-1 (the register accepts all possible states except 0000...0). The output sequence of the LFSR having the maximum period is called M-sequence.

To find out the conditions under which the LFSR will have a maximum period, the feedback functions assign a characteristic polynomial. Thus, the register given above as an example corresponds to a polynomial. Theoretical analysis shows that the LFSR will have a maximum period if and only if the polynomial P(x) is primitive. Below are some primitive polynomials recommended for use in practice. The table shows the powers of the variable x in polynomial notation. For example, the entry (31, 3) corresponds to a polynomial.

| № | P(x) | № | P(x) | № | P(x) |

| (39, 16, 23, 35) | (38, 5, 6, 27) | (32, 2, 7, 16) | |||

| (30, 6, 4, 1) | (31, 6) | (31, 7) | |||

| (31, 13) | (31, 25, 23, 8) | (33, 13) | |||

| (35, 2) | (47, 5) | (48, 9, 7, 4) | |||

| (47, 11, 24, 32) | (46, 18, 31, 40) | (53, 6, 2, 1) | |||

| (55, 24) | (57, 7) | (58, 19) | |||

| (59, 7, 4, 2) | (41, 27, 31, 32) | (61, 5, 2, 1) | |||

| (42, 30, 31, 34) | (51, 15, 24, 46) | (50, 17, 31, 34) |

LFSRs were originally designed to be implemented in hardware as a set digital circuits. Software implementations LFSRs are usually inferior in speed to hardware ones. To increase performance, it is advantageous to store the register state as an integer L-bit number, the individual bits of which correspond to the binary bits of the register. Then, bitwise operations (shift, masking, etc.) are used to access individual bits.

decryption - p i = D (k i, c i), as shown in Fig. 7.21.

Rice. 7.21.

Stream ciphers are faster than block ciphers. The hardware implementation of a stream cipher is also simpler. When we have to encrypt binary streams and transmit them at a constant speed, the best choice- use a stream cipher. Stream ciphers have greater protection against bit corruption during transmission.

In a modern stream cipher, each r -bit word in the plain text stream is encrypted using r -bit word in the key stream to create the corresponding r -bit word in the ciphertext stream.

Rice. 7.22.

A one-time pad is the perfect cipher. He's perfect. There is no method that would allow an adversary to recognize the key or statistics of the ciphertext and the plaintext. There is no relationship between the original and ciphertext. In other words, the ciphertext is a true random stream of bits, even if some samples of the original text are obtained. Eve cannot break the cipher unless she tries all possible random keystreams, which would be 2n if the plaintext size was n-bits. However, there is a problem with this. In order for the transmitter and receiver to share a one-time pad of keys, they must establish a connection every time they want to exchange information. They must somehow agree on a random key. So this perfect and ideal cipher is very difficult to implement.

Example 7.17

What is the appearance of the ciphertext when using the one-time pad cipher in each of the following cases?

A. The source text consists of n zeros.

b. The source text consists of n units.

V. The source text consists of alternating zeros and ones.

d. The source text is a random string of bits.

Solution

a. Because the ![]() , then the ciphertext stream will match the key stream. If the key is random, the ciphertext is also random. Parts of the original text are not stored in the ciphertext.

, then the ciphertext stream will match the key stream. If the key is random, the ciphertext is also random. Parts of the original text are not stored in the ciphertext.

b. Because the ![]() , where is the complement of the ciphertext stream is the complement of the key stream. If the keystream is random, then the ciphertext is also random; portions of the original text are not stored in the ciphertext.

, where is the complement of the ciphertext stream is the complement of the key stream. If the keystream is random, then the ciphertext is also random; portions of the original text are not stored in the ciphertext.

c. In this case, each bit in the ciphertext stream is either the same as in the key stream or its complement. Therefore the result is also random if the key stream is random.

d. In this case, the ciphertext is clearly random because performing an XOR operation on two random bits results in a random stream of bits.

Feedback shift register

One improvement to the one-time pad is (FSR - Feedback Shift Register). FSR can be implemented in either software or hardware, but for simplicity we will consider the hardware implementation. Feedback shift register comprises shift register and feedback functions, as shown in Fig. 7.23.

Rice. 7.23.

A shift register is a sequence of m cells from b 0 to b m-1, where each cell is designed to store a single bit. Cells are treated as an n-bit word, called at the beginning the "seed value" or source. Whenever a bit needs to be output (for example, from a signal at a certain time), each bit is shifted one cell to the right. This means that the value of each cell is assigned to the right adjacent cell and takes the value of the left cell. The rightmost cell b 0 is considered the output and gives the output value (k i ). The leftmost cell, b m-1, gets its value according to the value of the feedback function information. We denote the output of the function with feedback information b m . The feedback information function determines what values the cells have to calculate b m .

The feedback information shift register can be linear or nonlinear. Linear Feedback Shift Register (LFSR)

. Let us assume that b m is a linear function b 0, b 1,…..., b m-1, for which

A linear feedback shift register operates on binary digits, so the multiplication and addition are in the GF(2) field, so the value of C i is either 1 or 0, but C 0 must be 1 to obtain feedback information at the output. The addition operation is an EXCLUSIVE OR operation. In other words,

Example 7.18

Solution

Let's build a linear feedback shift register with 5 cells, in which .

If C i = 0, b i does not play a role in the calculation of b m, then this means that b i is not associated with the feedback information function. If c i = 1, b i is included in the calculation of b m. In this example, c 1 and c 3 are zeros, which means that we only have three connections. Figure 7.24 shows the circuit of a linear feedback shift register.

Rice. 7.24.

Example 7.19 ![]() Let's build a linear feedback shift register with 4 cells, in which . Show register value after 20 operations (shifts

Let's build a linear feedback shift register with 4 cells, in which . Show register value after 20 operations (shifts

Solution

), if the original value is (0001) 2 .

Figure 7.25 shows the design and use of a linear closed-loop shift register for encryption.

Rice. 7.25.

| Table 7.6. | present value | b 4 | b 3 | b 2 | b 1 | b 0 |

|---|---|---|---|---|---|---|

| k i | 1 | 0 | 0 | 0 | 1 | |

| 1 | 0 | 1 | 0 | 0 | 0 | 1 |

| 2 | 0 | 0 | 1 | 0 | 0 | 0 |

| 3 | 1 | 0 | 0 | 1 | 0 | 0 |

| 4 | 1 | 1 | 0 | 0 | 1 | 0 |

| 5 | 0 | 1 | 1 | 0 | 0 | 1 |

| 6 | 1 | 0 | 1 | 1 | 0 | 0 |

| 7 | 0 | 1 | 0 | 1 | 1 | 0 |

| 8 | 1 | 0 | 1 | 0 | 1 | 1 |

| 9 | 1 | 1 | 0 | 1 | 0 | 1 |

| 10 | 1 | 1 | 1 | 0 | 1 | 0 |

| 11 | 1 | 1 | 1 | 1 | 0 | 1 |

| 12 | 0 | 1 | 1 | 1 | 1 | 0 |

| 13 | 0 | 0 | 1 | 1 | 1 | 1 |

| 14 | 0 | 0 | 0 | 1 | 1 | 1 |

| 15 | 1 | 0 | 0 | 0 | 1 | 1 |

| 16 | 0 | 1 | 0 | 0 | 0 | 1 |

| 17 | 0 | 0 | 1 | 0 | 0 | 0 |

| 18 | 1 | 0 | 0 | 1 | 0 | 0 |

| 19 | 1 | 1 | 0 | 0 | 1 | 0 |

| 20 | 1 | 1 | 1 | 0 | 0 | 1 |

Note that the key stream is 1000100110101111 1001……. It looks, at first glance, like a random sequence, but if we look at a large number of transactions (shifts), we can see that the sequences are periodic. This repetition of 15 bits is shown below.

The stream key is generated using a linear feedback shift register pseudorandom sequence, in which sequences of length N are repeated. The flow is periodic. It depends on the generator circuit and initial information and can be no more than 2 m – 1. Each circuit generates m-bit sequences ranging from those containing all zeros to those containing all ones. However, if the initial sequence is all zeros, the result is useless - the original text would be a stream of all zeros. Therefore, such an initial sequence is excluded.