Automation tips. File database slowdowns and how to avoid them (from recent experience): how to work in 1C 8.3 when the network is slow


For various reasons, users of 1C occasionally run into performance problems: a document takes a long time to post, a report takes a long time to generate, transaction errors occur, the program freezes or responds slowly to user actions, and so on. Following the recommendations below can noticeably improve the program's performance and keep the system from hitting its limits. This is not a cure-all, but most 1C slowdowns are caused by exactly these issues.

1. Do not perform routine or background tasks while users are working

The first and main rule for system administrators is to schedule all background tasks to run outside working hours. The system should be as lightly loaded as possible while it performs routine tasks (indexing, document processing, data uploading), and those tasks should not get in the way of users. If the two work at different times, neither the system nor the users interfere with each other.
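A script that launches maintenance can guard against accidental daytime runs with a simple check. The sketch below is only an illustration: the function name and the 09:00-18:00 working day are assumptions, not something 1C provides.

```python
from datetime import time


def is_outside_working_hours(moment, start=time(9, 0), end=time(18, 0)):
    """Return True if `moment` falls outside the working day [start, end).

    The 09:00-18:00 default is an assumed schedule; adjust it to your office.
    """
    return not (start <= moment < end)


# A routine task planned for 02:30 passes the check; one planned for 11:00 does not.
print(is_outside_working_hours(time(2, 30)))
print(is_outside_working_hours(time(11, 0)))
```

The end of the working day is treated as already "outside", so a task scheduled exactly at closing time is allowed.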

2. Do not run RIB data exchange during users' working hours

Although companies have recently been abandoning RIB (distributed infobase) data exchange in favor of online mode and terminal access, it is worth remembering that while exchange data is being uploaded or downloaded, users cannot post documents or work fully in the program. If this procedure is used at all, run it at night as a background job whenever possible.

3. Increase PC performance in a timely manner, matching its power to real needs

Do not forget that the simultaneous operation of 30 and 100 users in the system produces different loads. Accordingly, if a quantitative increase in users is planned, the IT service should promptly consider with the company management the issue of expanding the machine fleet, purchasing additional memory or servers.

4. Software on which 1C runs

1C performs differently on different operating systems. For example, the server version of a 1C database on Linux with PostgreSQL is much slower than the same database on Windows with MS SQL. The exact reasons are not known, but apparently somewhere deep in the 1C platform there are compatibility problems with operating systems and DBMSs that do not come from Microsoft. It is also worth deploying the system on a 64-bit server if you plan to put a significant load on the database.

5. Database indexing

Indexing is an internal 1C procedure that “combs” the database from the inside. Schedule it as a background routine task at night and stop worrying about it.

6. Disabling operational batch accounting

During operational posting of documents, movements are written to registers, including the batch accounting registers. Writing to the batch accounting registers at posting time can be disabled in the program settings. Once a month you will then need to run the processing that posts documents by batches, for example when the load on the database is lowest or the fewest users are working.

7. RAM

Use the following formula:

RAM = (DB 1+DB 2+DB N) / 100 * 70

That is, about 70% of the total physical size of the databases. 1C databases are hungry for RAM; do not forget this.
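The rule of thumb above is easy to turn into a small calculation. This is a minimal sketch; the function name and the example database sizes are hypothetical, and only the 70% share comes from the formula.

```python
def recommended_ram_gb(db_sizes_gb, share_percent=70):
    """Estimate RAM as a percentage of the total physical size of all databases.

    Mirrors the formula RAM = (DB 1 + DB 2 + ... + DB N) / 100 * 70.
    """
    if any(size < 0 for size in db_sizes_gb):
        raise ValueError("database sizes must be non-negative")
    return sum(db_sizes_gb) / 100 * share_percent


# Three hypothetical databases of 10, 4 and 6 GB (20 GB in total).
print(round(recommended_ram_gb([10, 4, 6]), 1))
```

Sizes and the result share the same unit, so the function works equally well with megabytes.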

8. If possible, optimize custom reports and data processors that rely on imperfect or outdated code

Over the life of a company, custom reports and data processors get written, along with modifications for managing business processes and extracting specific information. These additions can cause glitches and slow the program down, because a) some self-taught “Kulibins” may once have written heavy, incorrect code that takes the program significant effort to execute; b) the code behind a data processor or report may have become obsolete and need revising and rewriting. Use the rule: the less we change in the program, the better.

9. Clear cache

A regular server reboot sometimes solves problems with a stale 1C cache. Just try it. Exporting the information base and loading it back through the Configurator can also help. The last resort is to clear a specific user's cache by deleting folders in the 1C system directory with names like kexifzghjuhfv8j33hbdgk0. Leave this for last, because besides removing garbage, clearing the cache has unpleasant side effects: saved report settings and the user's menu interface are deleted too.

10. Reducing the physical volume of databases

A larger database needs more resources, naturally. Use the standard 1C tools to collapse (roll up) the database. Consider giving up five years of data to improve performance. If you still need data from the last five years, you can always use a copy of the database.

11. Correct organization of architecture

In general, the architecture of the corporate information system must be right. What do we mean by right? The tasks assigned to the system must match the available hardware and software. Plan the system together with the system administrator (because he knows the machine fleet), the 1C programmer (because he knows the resource needs of 1C) and the head of the company (because he knows about the future growth or contraction of the company).

Users often complain that “1C 8.3 is slow”: document forms open slowly, documents take a long time to post, the program takes a long time to start, reports take a long time to generate, and so on.

Moreover, such “glitches” can occur in different programs.

The reasons vary: a document sequence that has not been restored, a weak computer or server, or an incorrectly configured 1C server.

In this article I want to look at one of the simplest and most common reasons for a slow program: routine and background tasks. These instructions are relevant for users of file databases with 1-2 users, where there is no competition for resources.


Where are the scheduled tasks in 1C 8.3?

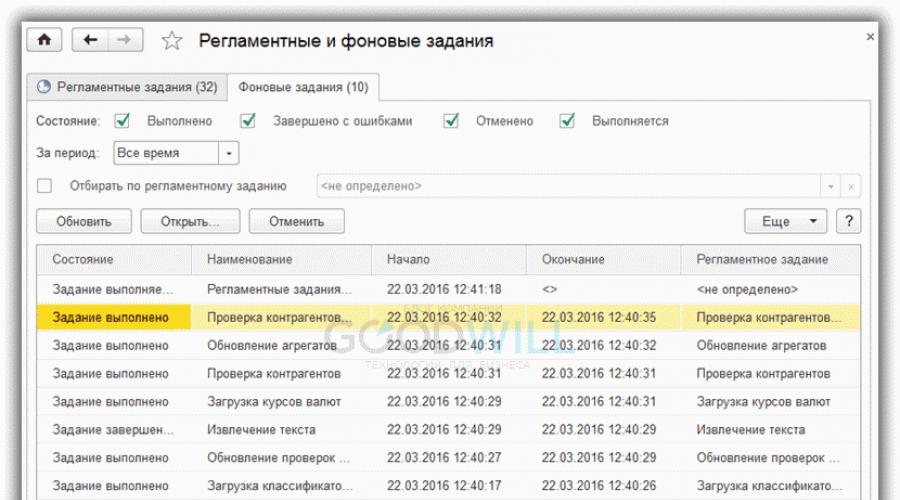

Before the program had even finished loading, 1C had already run many background tasks. You can view them in the “Administration” menu, under “Support and Maintenance”:


This is what the window with completed tasks looks like:

And here is a complete list of all scheduled tasks that are launched:

Among these tasks you can see loading currency rates, loading various classifiers, checking whether the program version is up to date, and so on. I have no use for almost any of them: I don't keep currency records, I track versions myself, and I load classifiers as needed.

Accordingly, it is in my (and in most cases in your) interests to disable unnecessary tasks.

Disabling routine and background tasks in 1C 8.3

1C: Accounting is one of the most famous and most convenient accounting programs. Proof of this is its widespread distribution in all areas of activity: trade, production, finance, etc.

Unfortunately, like all computer programs, 1C: Accounting also experiences various crashes and freezes. One of the most common problems is slow system operation.

This article looks at why this happens and how to fix it.

Eliminating common causes of slow 1C operation

1. The most common reason for slow program operation is slow access to the 1C database file, which can be caused by errors on the hard drive or, if cloud technologies are used, by a poor Internet connection. The antivirus settings may also be at fault.

Solution: scan the hard drive for errors, fix them, and defragment it. Test the Internet connection speed; if it is low (less than 1 Mb/s), contact your provider's technical support. Temporarily disable the antivirus protection and its firewall.

2. Perhaps the slow operation of the program is due to the large size of the database file.

To solve this problem, open 1C in “Configurator” mode and choose “Administration” in the menu, then “Testing and correction”. In the window, tick “Compression of information database tables” and make sure the “Testing and correction” option below it is selected. Click “Run” and wait for the process to complete.

3. The next possible reason is outdated software or an outdated version of the program itself.

The way out: update the operating system or install the latest version of 1C. As a preventive measure, always update to the latest version, which fixes errors from earlier configurations.

To install the latest version of the 1C system, you need to enter the program in the “Configuration” mode, then from the menu go to “Service” -> “Service” -> “Configuration Update”, then select the default settings and click the “Update” button.

Does 1C take two minutes to start? Does the document journal take 40 seconds to open? Does posting a document take almost a minute?

This is a familiar situation if you are using the file version with network access.

You can, of course, install a server and forget about the slowdowns, but if only 2-3 people work in 1C, spending money on server licenses is not practical.

Symptoms:

When several users work over the network with the same file (database), a network locking mechanism comes into play. It forces the system to spend valuable time identifying open write sessions and resolving conflicts between them. The main signs that locking is at work:

- A user works quickly with the database over the network in exclusive mode, and extremely slowly when several users work simultaneously.

- A user works quickly with a local database on the server, and slowly over the network.

- The processor on the server is almost idle.

- The gigabit network card is less than 5% loaded.

- File system access runs at slightly less than 10 MB/sec.

- When two computers try to post documents at the same time, one stalls for about a minute and the other is thrown out of 1C with the error text “failed to lock the table.”

- Starting 1C takes about 3 minutes.
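The pattern in this list (idle hardware, yet slow multi-user work) can be written down as a simple check. The sketch below is a hypothetical helper, not a 1C tool: the class, the field names and the 10% cut-off for an "almost idle" processor are assumptions, while the 5% network load and 10 MB/sec figures come from the list above.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    server_cpu_percent: float      # average CPU load on the machine holding the file
    network_load_percent: float    # utilization of the gigabit network card
    disk_throughput_mb_s: float    # file system traffic on the server
    fast_in_exclusive_mode: bool   # single user over the network works quickly
    fast_locally_on_server: bool   # a local session on the server works quickly


def looks_like_lock_contention(obs, cpu_idle_below=10.0):
    """Return True when resources are idle but shared network work is slow.

    cpu_idle_below is an assumed threshold for "almost idle".
    """
    hardware_idle = (
        obs.server_cpu_percent < cpu_idle_below
        and obs.network_load_percent < 5.0
        and obs.disk_throughput_mb_s < 10.0
    )
    return hardware_idle and obs.fast_in_exclusive_mode and obs.fast_locally_on_server


# Hypothetical readings taken while two users post documents at once.
sample = Observation(3.0, 4.0, 9.5, True, True)
print(looks_like_lock_contention(sample))
```

If any resource is actually saturated, the check returns False and the bottleneck should be sought in that resource instead.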

Tips that can help speed up the file database:

- Switch to terminal access. Unfortunately, Windows 7 cannot act as a terminal server using standard tools: it allows at most one active connection. The other sessions are not terminated; you can reconnect as another user, which pushes the previous user out without ending his session. So either move 1C to a server OS, which has no such restriction, or solve the problem with a third-party utility.

- Disable the use of the IPv6 network protocol, configure addressing on the “old” IPv4.

- Add the 1C processes to the Windows firewall and antivirus exceptions, or disable the firewall and antivirus completely (riskier, but a simple test showed a significant increase in document reposting speed with Avast antivirus disabled).

- Run full-text search indexing in 1C, or turn full-text search off completely.

- Run testing and correction of the database, checking with the ChDbfl utility (the utility is located in the “bin” folder of the installed technology platform).

- Run the "Check configuration" item in the configuration (if the configuration is not standard, this may be useful).

- Disable unnecessary functional options (the less unnecessary in the managed interface, the faster it works, as a rule).

- Set up user rights (the less unnecessary in the managed interface, the faster it works, as a rule).

- Start recalculating the totals and restoring the sequence (a significant increase can only occur if the totals have not been restored for a long time).

- Specify "Connection speed - low" in the database list settings.

- Defragment the disk that holds the file database.

- Collapse (roll up) the database (may be useful if the database is large, for example covering several years).

- Hardware upgrade - faster hard drive (SSD), new switch, processor, memory, etc.

- Install on a web server, access using a thin client.

After completing all these steps, the 1C file database can work much faster. In some cases it started in 10 seconds, and document posting became 12 times faster.

P.S. In the UT 11.1 configuration, running file-mode 1C over network access to a shared folder is unrealistic, because even the fastest solid-state drive, RAM and processor run into network locks, and work by more than one user becomes virtually impossible.

Self-written small configurations can work quite quickly even in the file version.

We often receive questions about what slows down 1C, especially after switching to version 8.3. Thanks to our colleagues from Interface LLC, we can explain in detail:

In our previous publications, we already touched on the impact of disk subsystem performance on the speed of 1C, but this study concerned the local use of the application on a separate PC or terminal server. At the same time, most small implementations involve working with a file database over a network, where one of the user’s PCs is used as a server, or a dedicated file server based on a regular, most often also inexpensive, computer.

A small survey of Russian-language resources on 1C showed that this issue is diligently avoided; if problems arise, the usual advice is to switch to client-server or terminal mode. It has also become almost generally accepted that configurations built on a managed application work much slower than conventional ones. The arguments are usually “ironclad”: “Accounting 2.0 just flew, but the ‘three’ (edition 3.0) barely moves.” There is, of course, some truth in these words, so let's try to figure it out.

Resource consumption, first glance

Before we began this study, we set ourselves two goals: to find out whether managed application-based configurations are actually slower than conventional configurations, and which specific resources have the primary impact on performance.

For testing, we took two virtual machines running Windows Server 2012 R2 and Windows 8.1, respectively, giving them 2 cores of the host Core i5-4670 and 2 GB of RAM, which corresponds to approximately an average office machine. The server was placed on a RAID 0 array of two WD Se, and the client was placed on a similar array of general-purpose disks.

As experimental bases we selected several Accounting 2.0 databases, release 2.0.64.12, which were then updated to 3.0.38.52; all configurations were run on platform 8.3.5.1443.

The first thing that attracts attention is the size of the “three” information base, which has grown significantly, as well as its much greater appetite for RAM:

We are ready to hear the usual “what on earth did they stuff into this ‘three’,” but let's not rush. Unlike users of client-server versions, which require a more or less qualified administrator, users of file versions rarely think about maintaining their databases. Employees of the specialized companies that service (read: update) these databases rarely think about it either.

Meanwhile, the 1C information base is a full-fledged DBMS with its own format, which also requires maintenance, and there is even a tool for it called Testing and correcting the information base. Perhaps the name played a cruel joke, since it suggests a tool for troubleshooting; but low performance is a problem too, and restructuring and reindexing, along with table compression, are well-known ways to optimize databases. Shall we check?

After applying the selected actions, the database sharply “lost weight”, becoming even smaller than the “two”, which no one had ever optimized, and RAM consumption also decreased slightly.

Later, after new classifiers and directories are loaded, indexes are created and so on, the size of the base will grow; in general, “three” bases are larger than “two” bases. That is not what matters most, though: while edition 2.0 was content with 150-200 MB of RAM, the new edition needs half a gigabyte, and this figure should be taken into account when planning the resources needed to work with the program.

Network

Network bandwidth is one of the most important parameters for network applications, especially ones like 1C in file mode, which move significant amounts of data across the network. Most small-business networks are built on inexpensive 100 Mbit/s equipment, so we began testing by comparing 1C performance on 100 Mbit/s and 1 Gbit/s networks.

What happens when you launch a 1C file database over the network? The client downloads a fairly large amount of data into temporary folders, especially on the first, “cold” start. At 100 Mbit/s we predictably run into the channel's bandwidth limit, and loading can take a significant amount of time: in our case about 40 seconds (each division on the graph is 4 seconds).
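To get a feel for why the channel becomes the limit, it helps to estimate the best-case transfer time for a given amount of data. The sketch below is an idealized calculation: the function name and the 400 MB example are hypothetical (the article does not state how much data the client downloads), and real transfers are slower because of protocol overhead and client-side processing.

```python
def transfer_seconds(data_mb, link_mbit_s, efficiency=1.0):
    """Best-case time to move `data_mb` megabytes over a `link_mbit_s` link.

    efficiency (0..1] is an assumed share of the nominal bandwidth that is
    actually usable; 1.0 gives the theoretical lower bound.
    """
    if link_mbit_s <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link speed must be positive and efficiency in (0, 1]")
    megabytes_per_second = link_mbit_s / 8 * efficiency  # 8 bits per byte
    return data_mb / megabytes_per_second


# Hypothetical cold start that pulls 400 MB from the file server.
for speed in (100, 1000):
    print(speed, "Mbit/s:", round(transfer_seconds(400, speed), 1), "s")
```

The ideal ratio between the two links is tenfold; measured gains can be smaller because the client also spends time unpacking and caching what it receives.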

The second launch is faster, since some of the data is kept in the cache and stays there until reboot. Switching to a gigabit network significantly speeds up program loading, both “cold” and “hot”, and the ratio between the values is preserved. We therefore decided to express the results in relative values, taking the largest value of each measurement as 100%:

As the graphs show, Accounting 2.0 loads twice as fast as edition 3.0 at any network speed, and moving from 100 Mbit/s to 1 Gbit/s cuts the loading time by a factor of four. There is no difference between the optimized and non-optimized “three” databases in this mode.
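The relative scale used in these comparisons, where the largest value of a measurement is taken as 100%, can be reproduced with a few lines. The function name and the timings in the example are hypothetical and serve only to show the normalization.

```python
def to_relative_percent(measurements):
    """Scale a dict of measurements so that the largest value becomes 100%."""
    largest = max(measurements.values())
    if largest <= 0:
        raise ValueError("at least one measurement must be positive")
    return {name: value / largest * 100 for name, value in measurements.items()}


# Hypothetical loading times in seconds for one network speed.
timings = {"edition 2.0": 20, "edition 3.0": 40}
print(to_relative_percent(timings))
```

Normalizing each measurement separately makes results from different network speeds comparable, at the cost of hiding the absolute times.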

We also checked how network speed affects heavy operations, for example group reposting. The result is again expressed in relative values:

This is more interesting: on a 100 Mbit/s network the optimized “three” works at the same speed as the “two”, while the non-optimized one is twice as slow. On gigabit the ratios remain similar: the non-optimized “three” is again twice as slow as the “two”, and the optimized one lags behind by a third. Moving to 1 Gbit/s also cuts the execution time to a third for edition 2.0 and to a half for edition 3.0.

In order to evaluate the impact of network speed on everyday work, we used Performance measurement, performing a sequence of predetermined actions in each database.

In fact, for everyday tasks network throughput is not a bottleneck: the non-optimized “three” is only 20% slower than the “two”, and after optimization it is about as much faster, which shows the advantages of working in thin client mode. Moving to 1 Gbit/s gives the optimized base no advantage, while the non-optimized base and the “two” start working faster, with only a small difference between them.

From these tests it is clear that the network is not a bottleneck for the new configurations, and that the managed application runs even faster than the conventional one. Switching to 1 Gbit/s can be recommended if heavy tasks and database loading speed are critical for you; otherwise the new configurations let you work effectively even on slow 100 Mbit/s networks.

So why is 1C slow? We'll look into it further.

Server disk subsystem and SSD

In the previous article we improved 1C performance by placing the databases on an SSD. Perhaps the server's disk subsystem is not fast enough? We measured the server's disk performance during group reposting in two databases at once and got a rather optimistic result.

Despite the relatively large number of input/output operations per second (IOPS) - 913, the queue length did not exceed 1.84, which is a very good result for a two-disk array. Based on this, we can make the assumption that a mirror made from ordinary disks will be enough for the normal operation of 8-10 network clients in heavy modes.
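The queue figure is easier to judge when expressed per disk. The sketch below divides the measured average queue length by the number of disks in the array and compares it with a limit of 2 outstanding requests per disk; that limit is a commonly quoted rule of thumb and an assumption here, not a value from the measurements, and the function names are hypothetical.

```python
def queue_per_disk(avg_queue_length, disks):
    """Average number of outstanding I/O requests per physical disk."""
    if disks <= 0:
        raise ValueError("the array must contain at least one disk")
    return avg_queue_length / disks


def is_disk_bottleneck(avg_queue_length, disks, per_disk_limit=2.0):
    """True if the per-disk queue exceeds the assumed rule-of-thumb limit."""
    return queue_per_disk(avg_queue_length, disks) > per_disk_limit


# Figures from the measurement: queue length 1.84 on a two-disk array.
print(round(queue_per_disk(1.84, 2), 2))
print(is_disk_bottleneck(1.84, 2))
```

With less than one request waiting per disk on average, the array is far from saturated, which matches the optimistic reading of the result.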

So is an SSD needed on the server? The best way to answer is by testing, which we carried out using the same method; the network connection is 1 Gbit/s throughout, and the result is again expressed in relative values.

Let's start with the loading speed of the database.

It may seem surprising to some, but the SSD on the server does not affect the loading speed of the database. The main limiting factor here, as the previous test showed, is network throughput and client performance.

Let's move on to reposting:

We noted above that disk performance is quite sufficient even for heavy operations, so the SSD makes no difference here either, except for the non-optimized base, which on the SSD caught up with the optimized one. This once again confirms that optimization puts the information in the database in order, reducing the number of random I/O operations and speeding up access to it.

In everyday tasks the picture is similar:

Only the non-optimized database benefits from the SSD. You can, of course, buy an SSD, but it would be much better to think about timely database maintenance. Also, do not forget to defragment the partition that holds the infobases on the server.

Client disk subsystem and SSD

We discussed the influence of SSD on the speed of operation of locally installed 1C in the previous material; much of what was said is also true for working in network mode. Indeed, 1C quite actively uses disk resources, including for background and routine tasks. In the figure below you can see how Accounting 3.0 quite actively accesses the disk for about 40 seconds after loading.

But at the same time, you should be aware that for a workstation where active work is carried out with one or two information databases, the performance resources of a regular mass-produced HDD are quite sufficient. Purchasing an SSD can speed up some processes, but you won’t notice a radical acceleration in everyday work, since, for example, downloading will be limited by network bandwidth.

A slow hard drive can slow down some operations, but in itself cannot cause a program to slow down.

RAM

Despite the fact that RAM is now obscenely cheap, many workstations continue to work with the amount of memory that was installed when purchased. This is where the first problems lie in wait. Based on the fact that the average “troika” requires about 500 MB of memory, we can assume that a total amount of RAM of 1 GB will not be enough to work with the program.

We reduced the system memory to 1 GB and launched two information databases.

At first glance, things are not so bad: the program curbed its appetite and fit into the available memory. But let's not forget that the amount of working data it needs has not changed, so where did it go? It was pushed out to disk: cache, swap, and so on. The essence of this operation is that data not needed at the moment is moved from fast RAM, of which there is not enough, to slow disk storage.

Where does this lead? Let's see how system resources are used in heavy operations, for example by running group reposting in two databases at once. First on a system with 2 GB of RAM:

As we can see, the system actively uses the network to receive data and the processor to process it; disk activity is insignificant; during processing it increases occasionally, but is not a limiting factor.

Now let's reduce the memory to 1 GB:

The situation changes radically: the main load now falls on the hard drive, while the processor and network sit idle, waiting for the system to read the necessary data from disk into memory and write unneeded data back out.

Subjectively, too, working with two open databases on a system with 1 GB of memory turned out to be extremely uncomfortable: directories and journals opened with a significant delay and heavy disk activity. For example, opening the Sales of goods and services journal took about 20 seconds and was accompanied the whole time by high disk activity (highlighted with a red line).

To objectively evaluate the impact of RAM on the performance of configurations based on a managed application, we carried out three measurements: the loading speed of the first database, the loading speed of the second database, and group reposting in one of the databases. Both databases are completely identical and were created by copying the optimized database. The result is expressed in relative units.

The result speaks for itself: loading time increases by about a third, which is still tolerable, but the time needed for operations in the database triples, so comfortable work under such conditions is out of the question. Incidentally, this is a case where buying an SSD can improve matters, but it is much easier (and cheaper) to address the cause rather than the consequences and simply buy the right amount of RAM.

Lack of RAM is the main reason why working with new 1C configurations turns out to be uncomfortable. Configurations with 2 GB of memory on board should be considered minimally suitable. At the same time, keep in mind that in our case, “greenhouse” conditions were created: a clean system, only 1C and the task manager were running. In real life, on a work computer, as a rule, a browser, an office suite are open, an antivirus is running, etc., etc., so proceed from the need for 500 MB per database, plus some reserve, so that during heavy operations you do not encounter a lack of memory and a sharp decrease in productivity.
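The sizing advice above can be turned into a rough estimate for a workstation. In the sketch below only the 500 MB per database comes from the text; the function name, the allowance for other software (browser, office suite, antivirus, the OS itself) and the size of the reserve are assumptions to be replaced with your own figures.

```python
def workstation_ram_mb(open_databases, per_database_mb=500,
                       other_software_mb=1000, reserve_share=0.2):
    """Rough RAM estimate: databases plus other software plus a reserve.

    other_software_mb and reserve_share are assumed values, not measurements.
    """
    if open_databases < 0:
        raise ValueError("the number of databases must be non-negative")
    working_set = open_databases * per_database_mb + other_software_mb
    return working_set * (1 + reserve_share)


# Two open databases next to an assumed 1000 MB of other software.
print(round(workstation_ram_mb(2)), "MB")
```

Under these assumptions two open databases already call for more than 2 GB, which is why 2 GB is described only as the minimally suitable configuration.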

CPU

Without exaggeration, the central processor can be called the heart of the computer, since it ultimately performs all the calculations. To evaluate its role, we ran another set of tests, the same as for RAM, reducing the number of cores available to the virtual machine from two to one; the test was performed twice, with 1 GB and 2 GB of memory.

The result turned out to be quite interesting and unexpected: a more powerful processor took on the load quite effectively when resources were short, and gave no tangible advantage the rest of the time. 1C Enterprise can hardly be called an application that actively uses processor resources; it is rather undemanding. And in difficult conditions the processor is burdened not so much by the application's own calculations as by servicing overhead: additional input/output operations and the like.

Conclusions

So, why is 1C slow? First of all, this is a lack of RAM; the main load in this case falls on the hard drive and processor. And if they do not shine with performance, as is usually the case in office configurations, then we get the situation described at the beginning of the article - the “two” worked fine, but the “three” is ungodly slow.

In second place is network performance; a slow 100 Mbit/s channel can become a real bottleneck, but at the same time, the thin client mode is able to maintain a fairly comfortable level of operation even on slow channels.

Then you should pay attention to the disk drive; buying an SSD is unlikely to be a good investment, but replacing the drive with a more modern one would be a good idea. The difference between generations of hard drives can be assessed from the following material: Review of two inexpensive Western Digital Blue series drives 500 GB and 1 TB.

And finally the processor. A faster model, of course, will not be superfluous, but there is little point in increasing its performance unless this PC is used for heavy operations: group processing, heavy reports, month-end closing, etc.

We hope this material will help you quickly understand the question “why 1C is slow” and solve it most effectively and without extra costs.